Indoor obstacle detection system based on monocular vision and ultrasound applied to an intelligent car


摘要:

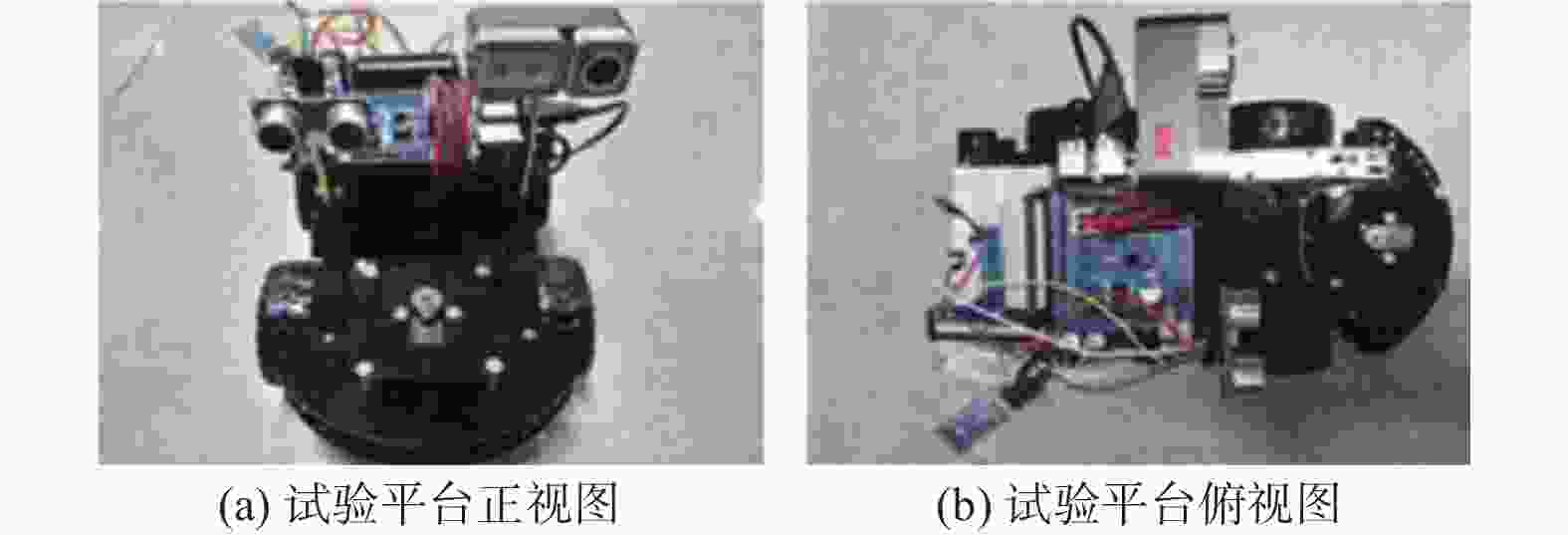

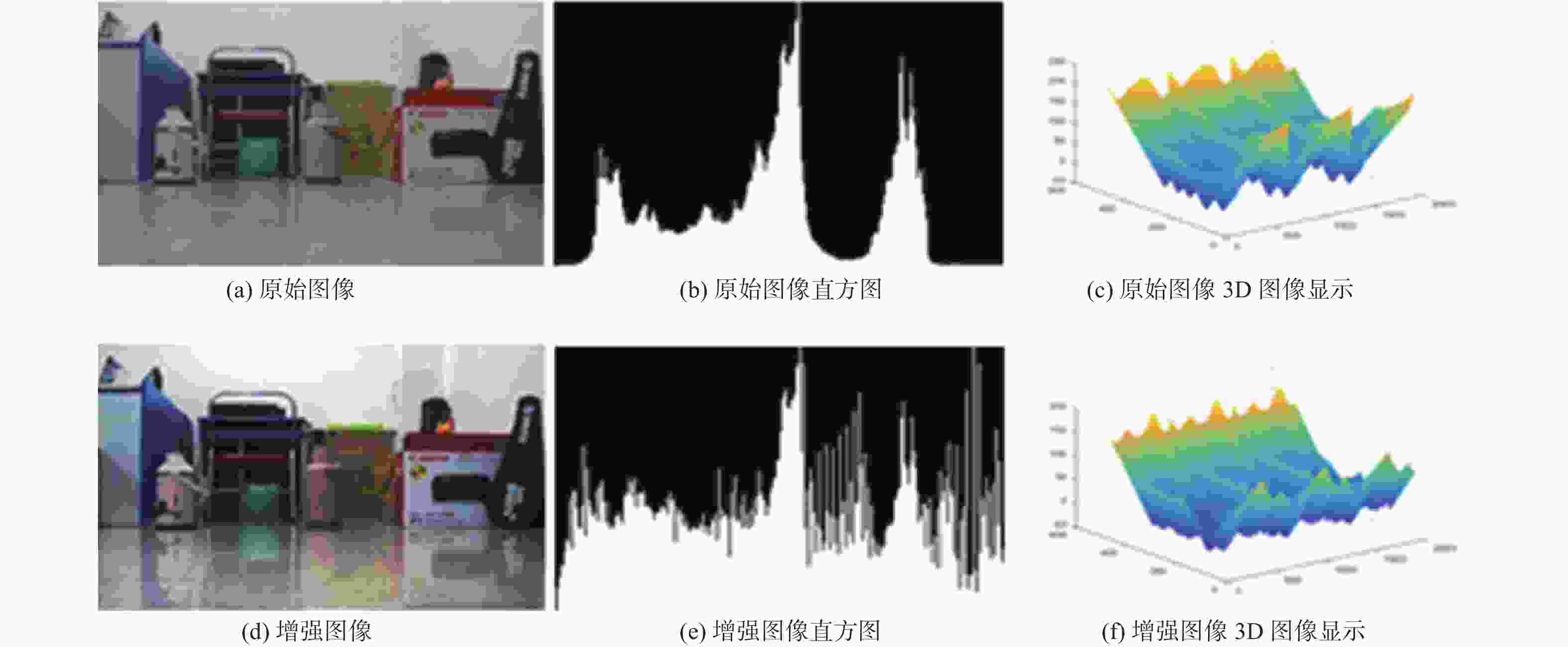

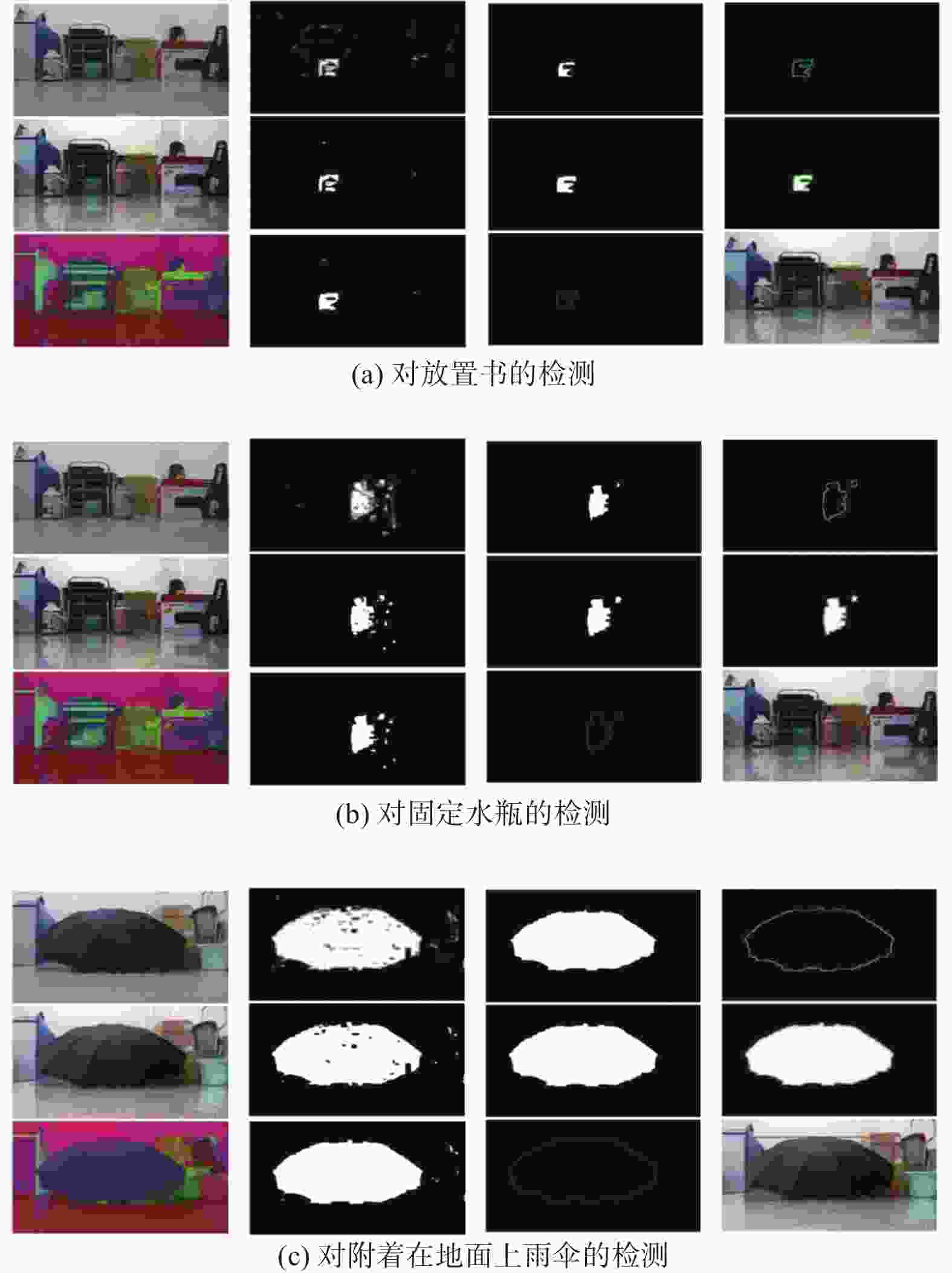

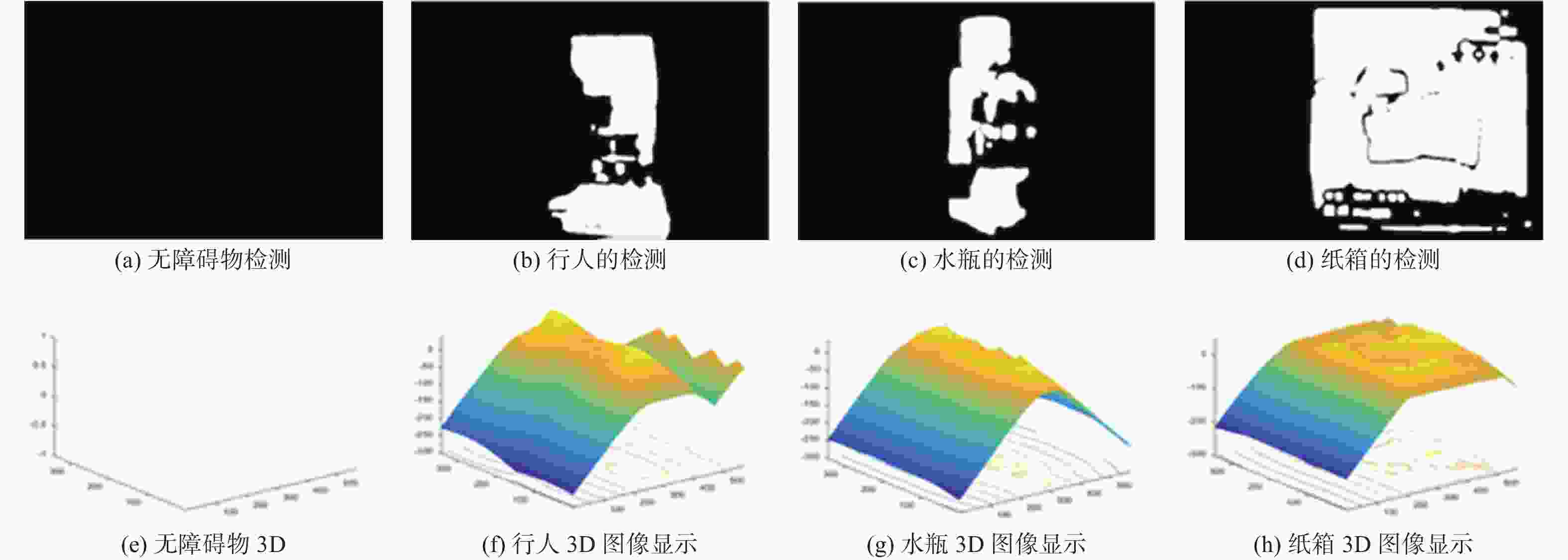

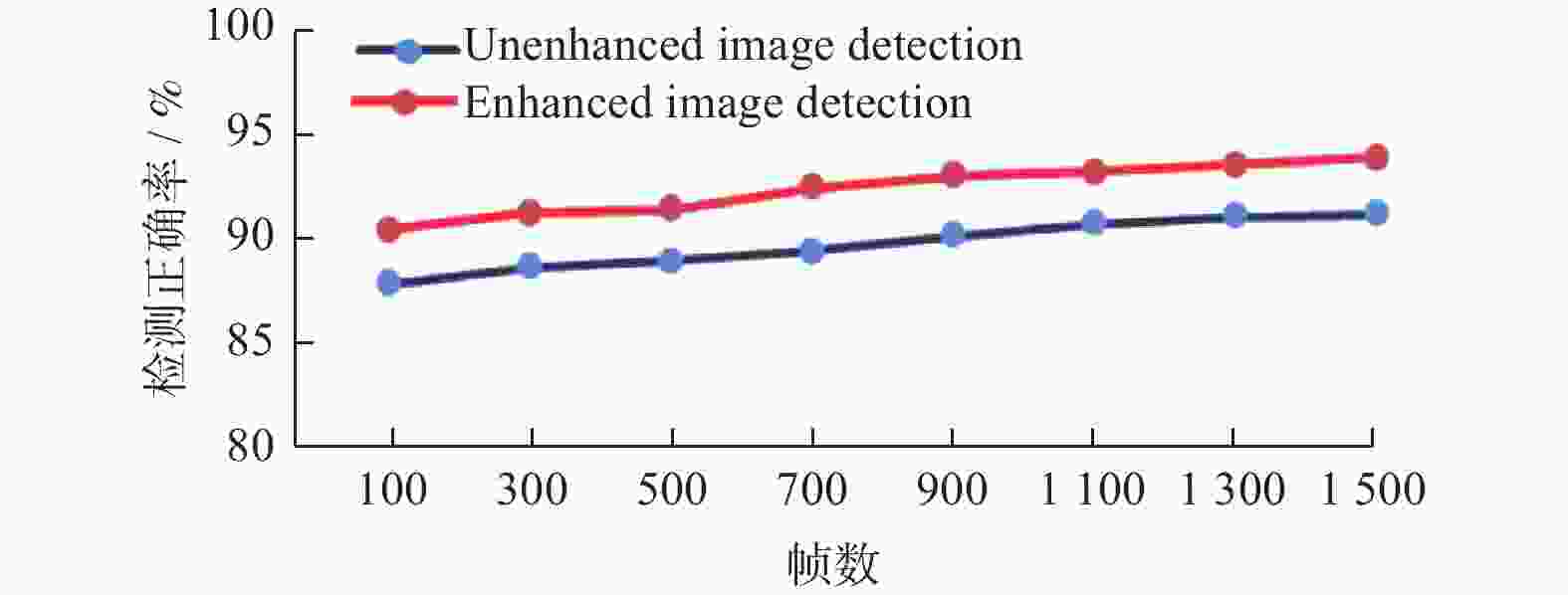

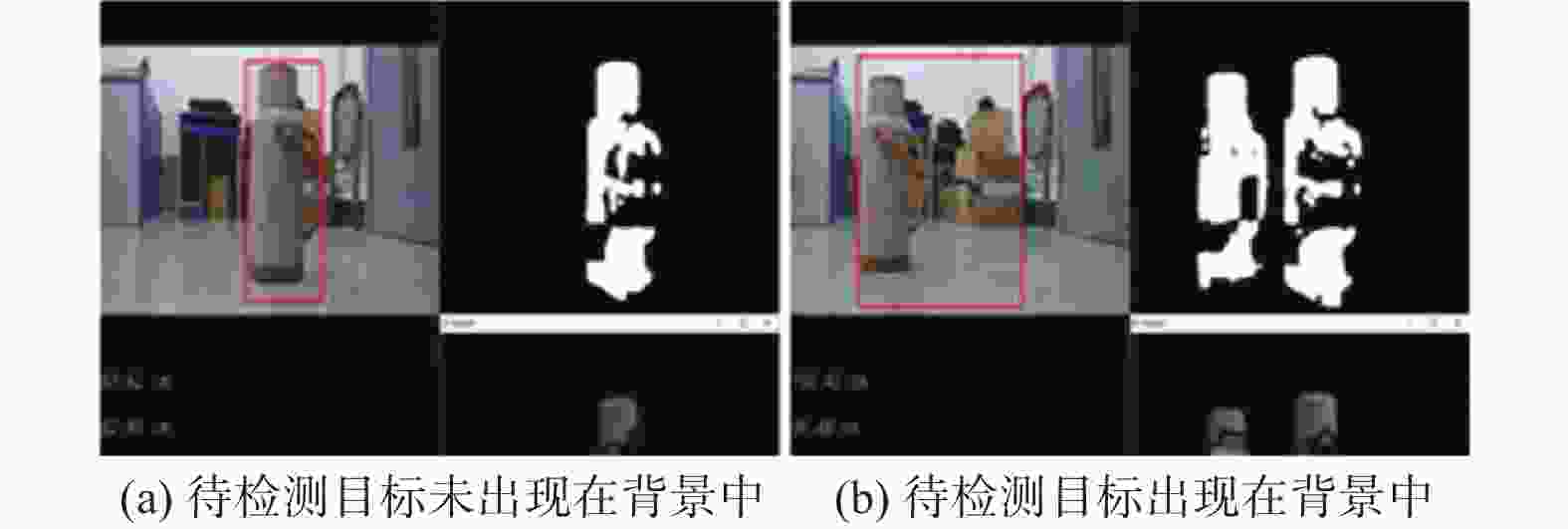

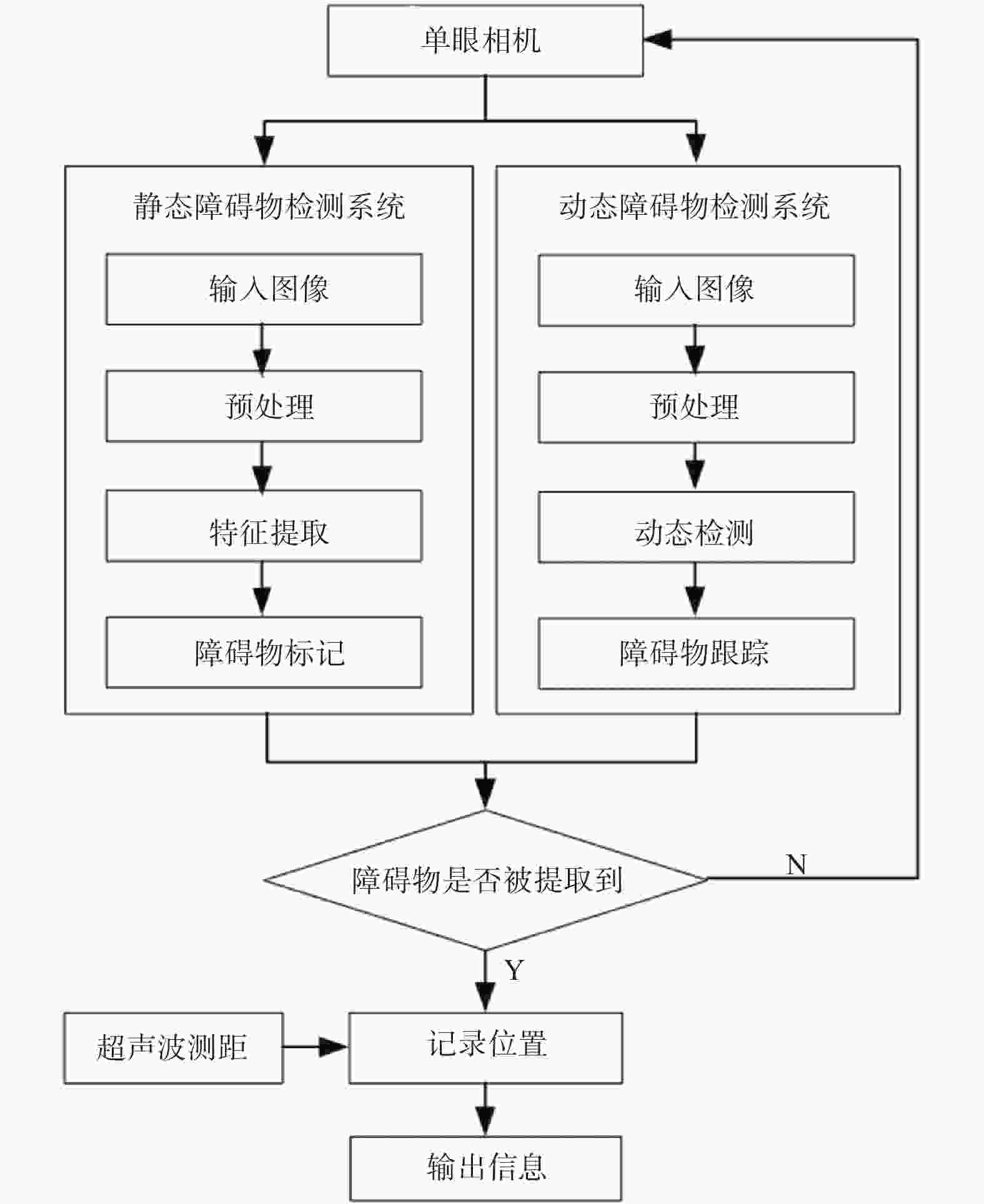

提出一种基于单眼视觉和超声波测距的树莓派智能机器人车检测静态和动态障碍物的方法. 采用改进的单眼视觉障碍物检测算法,对室内的静态和动态障碍物进行轮廓检测,并利用超声波传感器测量机器人车与障碍物之间的距离. 针对静态障碍物检测,在图像预处理阶段引入图像增强,并通过HSV图像提取不同障碍物颜色特征,以提高障碍物轮廓标定的效率和准确率. 针对动态障碍物检测,结合背景差分与3D图像显示技术实现动态目标捕捉,并设置距离决策模块记录障碍物位置信息. 试验结果表明,该方法可有效减少障碍物检测的平均消耗时间以及障碍物位置信息的错误率,提高室内障碍物检测的效率和准确性.

Abstract:A method based on monocular vision and ultrasonic ranging for the intelligent Raspberry Pi robot for detecting static and dynamic obstacles was proposed. An improved monocular visual obstacle detection algorithm was applied to perform contour detection on indoor static and dynamic obstacles, the distance was measured between the robot car and obstacles with an ultrasonic sensor. For static obstacle detection, image enhancement was introduced in the image preprocessing stage, and different obstacle color features were extracted through HSV images to improve the efficiency and accuracy of obstacle contour calibration. For dynamic obstacle detection, background difference was combined with 3D image display technology to achieve dynamic target capture, and a distance decision module was set up to record obstacle location information. The experimental results show that the method can effectively reduce the average consumption time of obstacle detection, and improve the accuracy of indoor obstacle detection.
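The abstract does not specify which enhancement or HSV thresholds the improved algorithm uses, so the following is only a minimal sketch of the general static-obstacle idea under stated assumptions: a global min-max contrast stretch stands in for "image enhancement", a hypothetical hue/saturation/value range selects one obstacle color, and an axis-aligned bounding box stands in for full contour tracing. The function names and all threshold values are illustrative, not the authors'.

```python
import colorsys
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]
Image = List[List[RGB]]          # rows of (R, G, B) pixels, 0-255
Mask = List[List[bool]]
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max)


def stretch_contrast(img: Image) -> Image:
    """Global min-max contrast stretch to 0-255 (assumed enhancement step).

    The same linear map is applied to all three channels, so hue is preserved
    and the later HSV color test is not distorted.
    """
    values = [c for row in img for px in row for c in px]
    lo, hi = min(values), max(values)
    if hi == lo:
        return [list(row) for row in img]  # flat image: nothing to stretch
    scale = 255 / (hi - lo)
    return [[tuple(round((c - lo) * scale) for c in px) for px in row] for row in img]


def hsv_mask(img: Image, hue_deg: Tuple[float, float], s_min: float, v_min: float) -> Mask:
    """Mark pixels whose HSV color lies in a (hypothetical) obstacle color range."""
    mask = []
    for row in img:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            mask_row.append(hue_deg[0] <= h * 360 <= hue_deg[1] and s >= s_min and v >= v_min)
        mask.append(mask_row)
    return mask


def bounding_box(mask: Mask) -> Optional[Box]:
    """Box around all selected pixels (a simplification of contour detection)."""
    xs = [x for row in mask for x, on in enumerate(row) if on]
    ys = [y for y, row in enumerate(mask) if any(row)]
    if not xs:
        return None
    return (min(xs), min(ys), max(xs), max(ys))


if __name__ == "__main__":
    # Synthetic 5x5 frame: gray floor with a 2x2 blue "obstacle".
    gray, blue = (120, 120, 120), (30, 30, 200)
    frame = [[gray] * 5 for _ in range(5)]
    for y in (1, 2):
        for x in (2, 3):
            frame[y][x] = blue
    enhanced = stretch_contrast(frame)
    print(bounding_box(hsv_mask(enhanced, (200, 260), s_min=0.4, v_min=0.3)))  # (2, 1, 3, 2)
```

Thresholding on hue rather than raw RGB is what makes the color test fairly robust to brightness changes; a real implementation would typically use OpenCV's color conversion, range masking and contour functions instead of these pure-Python loops.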

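For dynamic obstacles the abstract names background subtraction but gives no model details, so here is a minimal sketch assuming a running-average background on grayscale frames: a pixel counts as moving when it differs from the background model by more than a threshold, and the moving target is the bounding box of those pixels. The 3D display component is not modeled, and the names `foreground_mask`, `update_background` and `moving_target`, plus the `threshold`, `alpha` and `min_pixels` values, are hypothetical.

```python
from typing import List, Optional, Tuple

Frame = List[List[float]]        # grayscale intensities, 0-255
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max)


def foreground_mask(background: Frame, frame: Frame, threshold: float = 30.0) -> List[List[bool]]:
    """Background subtraction: flag pixels that differ enough from the model."""
    return [
        [abs(f - b) > threshold for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


def update_background(background: Frame, frame: Frame, alpha: float = 0.05) -> Frame:
    """Running-average model, so slow lighting changes are absorbed over time."""
    return [
        [(1 - alpha) * b + alpha * f for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


def moving_target(mask: List[List[bool]], min_pixels: int = 2) -> Optional[Box]:
    """Bounding box of foreground pixels; blobs smaller than min_pixels are treated as noise."""
    points = [(x, y) for y, row in enumerate(mask) for x, on in enumerate(row) if on]
    if len(points) < min_pixels:
        return None
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))


if __name__ == "__main__":
    background = [[50.0] * 6 for _ in range(4)]
    frame = [row[:] for row in background]
    for y in (1, 2):
        for x in (3, 4):
            frame[y][x] = 200.0  # a bright object enters the scene
    print(moving_target(foreground_mask(background, frame)))  # (3, 1, 4, 2)
    background = update_background(background, frame)
```

A small `alpha` keeps a briefly passing object from being absorbed into the background, at the cost of adapting slowly to genuine scene changes.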

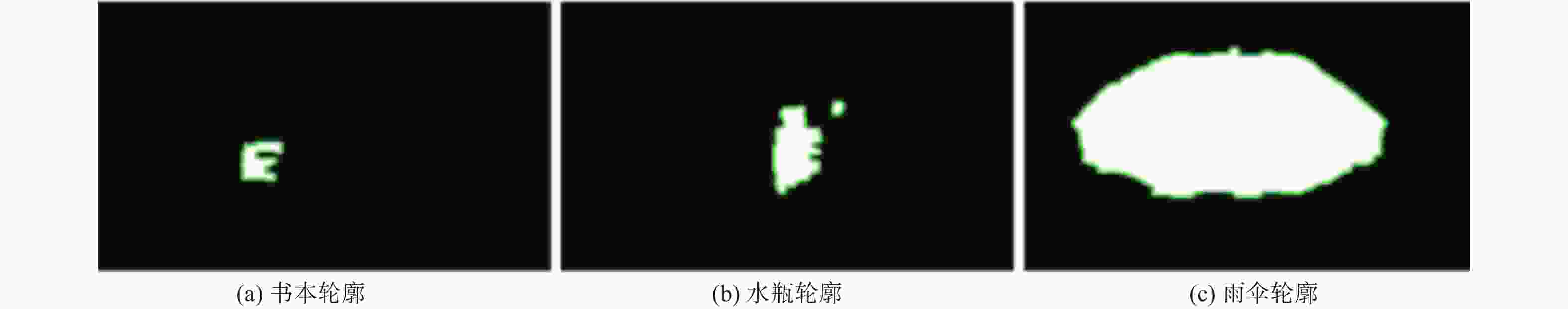

Table 1. Distances from static obstacles to the smart car

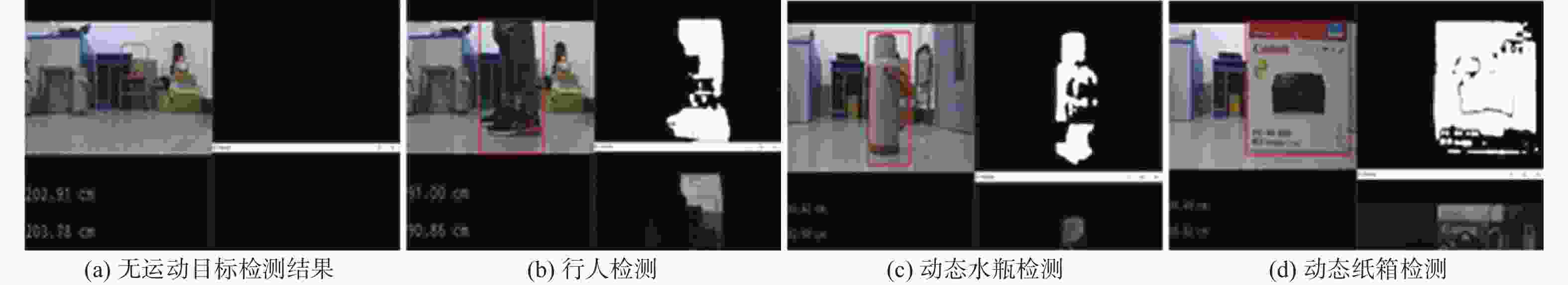

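| Obstacle | Distance (cm) |
|---|---|
| Book | 182.36 |
| Water bottle | 153.66 |
| Umbrella | 112.52 |

Table 2. Distances between dynamic obstacles and the smart car

| Obstacle | Distance (cm) |
|---|---|
| Pedestrian | 90.86 |
| Moving water bottle | 82.99 |
| Moving cardboard box | 85.02 |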
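The distances in Tables 1 and 2 come from the ultrasonic sensor, but the page does not state the ranging formula or the rules used by the distance decision module. The sketch below assumes standard time-of-flight ranging (distance = speed of sound × echo time / 2, with the common approximation v ≈ 331.3 + 0.606·T m/s) and a purely hypothetical three-zone rule; `decide`, `ObstacleLog` and the `stop_cm`/`slow_cm` thresholds are illustrative names and values, and the table distances are used only as sample inputs.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


def speed_of_sound_cm_s(temp_c: float = 20.0) -> float:
    """Approximate speed of sound in dry air, v ≈ 331.3 + 0.606·T m/s, returned in cm/s."""
    return (331.3 + 0.606 * temp_c) * 100.0


def echo_to_distance_cm(echo_s: float, temp_c: float = 20.0) -> float:
    """Time-of-flight ranging: the pulse travels out and back, so halve the path."""
    return speed_of_sound_cm_s(temp_c) * echo_s / 2.0


def decide(distance_cm: float, stop_cm: float = 30.0, slow_cm: float = 100.0) -> str:
    """Hypothetical distance decision rule; the thresholds are illustrative only."""
    if distance_cm < stop_cm:
        return "stop"
    if distance_cm < slow_cm:
        return "slow"
    return "proceed"


@dataclass
class ObstacleLog:
    """Records (label, distance in cm, action) for each detected obstacle."""
    entries: List[Tuple[str, float, str]] = field(default_factory=list)

    def record(self, label: str, distance_cm: float) -> str:
        action = decide(distance_cm)
        self.entries.append((label, distance_cm, action))
        return action


if __name__ == "__main__":
    # Echo time that a 182.36 cm reading would imply at 20 °C, converted back to distance.
    echo = 2 * 182.36 / speed_of_sound_cm_s()
    print(round(echo_to_distance_cm(echo), 2))  # 182.36
    log = ObstacleLog()
    print(log.record("book", 182.36))           # proceed
    print(log.record("pedestrian", 90.86))      # slow
```

Compensating for air temperature matters at these ranges: at 182 cm, a 10 °C error shifts the speed of sound by about 1.8 %, or roughly 3 cm.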

