Improved weed identification algorithm based on YOLOv5-SPD


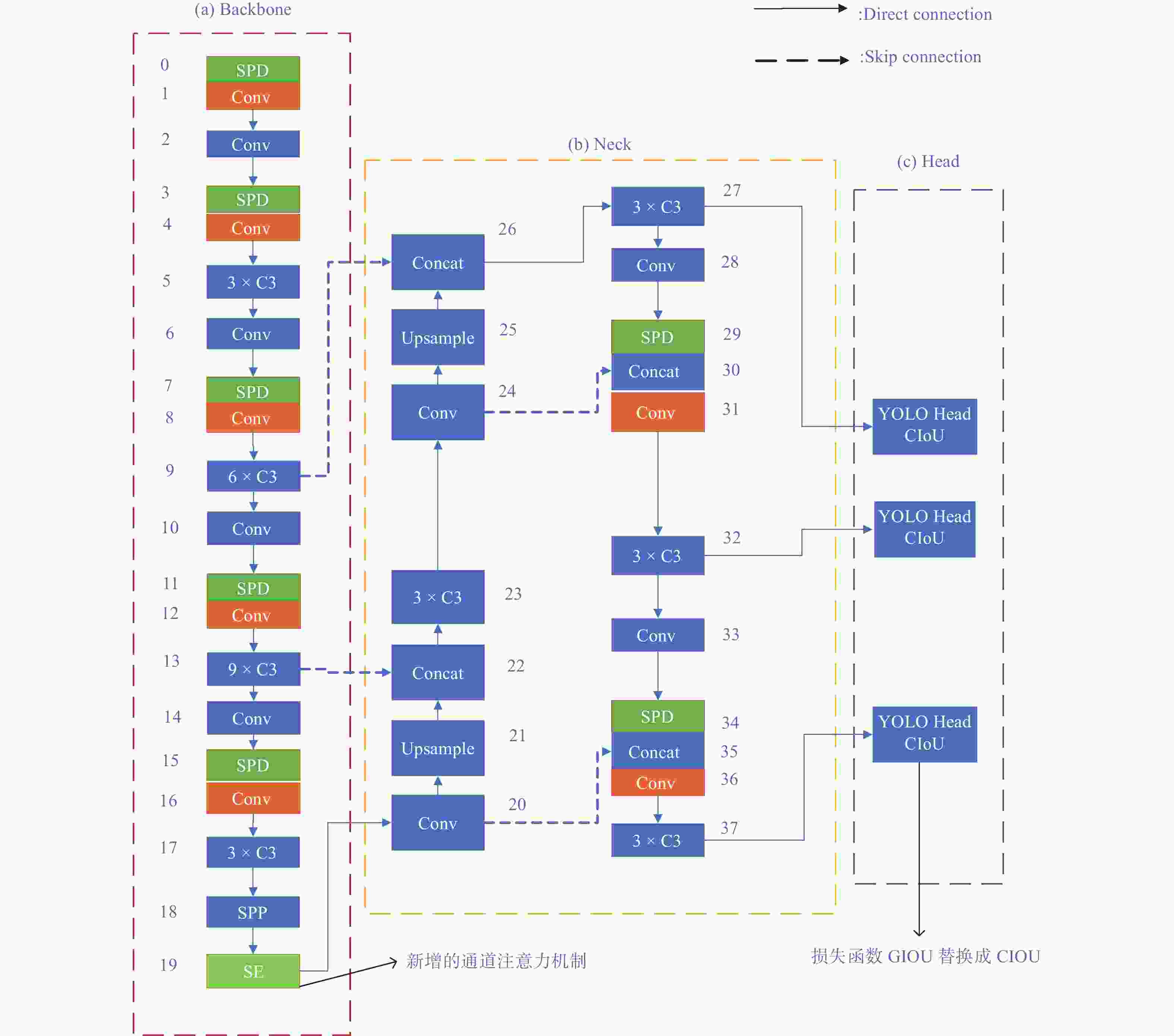


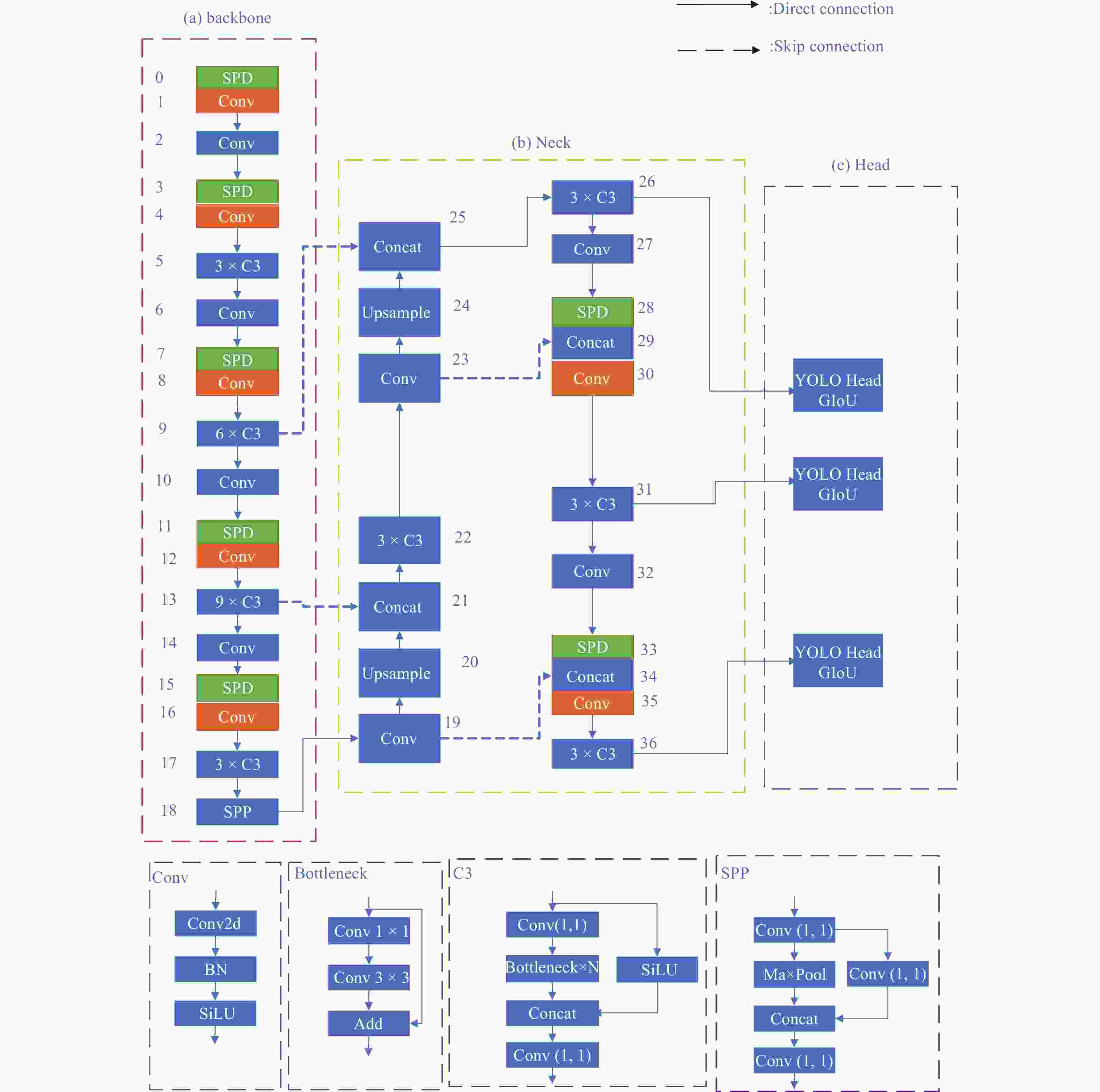

Abstract: Accurate weed identification is the primary prerequisite for replacing manual weeding with machines. Newly emerged weeds are small targets and are therefore difficult to identify. YOLOv5-SPD performs well on small-target recognition, but its robustness and accuracy still need improvement. Adding a channel attention mechanism to YOLOv5-SPD strengthens the weights of effective features, making the network's learning more targeted. In addition, replacing the generalized intersection over union (GIoU) loss function with the complete intersection over union (CIoU) loss function accounts for the overlap between boxes, the aspect ratios of the target and predicted boxes, and the distance between their center points, thereby bringing the predicted weed boxes closer to the ground-truth boxes. Experimental results on the weed dataset show that the improved network reaches a detection accuracy (mAP(0.5)) of 70.3% and a precision of 94.1%, which are 4.7 and 2.8 percentage points higher, respectively, than those of the original YOLOv5-SPD.

Key words: attention mechanism; boundary loss function; YOLOv5-SPD algorithm; weed identification
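To make the abstract's point about the loss functions concrete, the following is a minimal pure-Python sketch of the GIoU and CIoU losses as they are commonly defined in the literature. It is not the authors' implementation: the helper names (`_iou_terms`, `giou_loss`, `ciou_loss`), the `(x1, y1, x2, y2)` box format, and the example boxes are all assumptions made for illustration.

```python
import math


def _iou_terms(a, b):
    """Return (IoU, union area, enclosing box) for two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    enclose = (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))
    return inter / union, union, enclose


def giou_loss(pred, target):
    """GIoU loss: 1 - (IoU - share of the enclosing box not covered by the union)."""
    iou, union, (ex1, ey1, ex2, ey2) = _iou_terms(pred, target)
    enclose_area = (ex2 - ex1) * (ey2 - ey1)
    return 1.0 - (iou - (enclose_area - union) / enclose_area)


def ciou_loss(pred, target, eps=1e-9):
    """CIoU loss: 1 - IoU + center-distance penalty + aspect-ratio penalty."""
    iou, _, (ex1, ey1, ex2, ey2) = _iou_terms(pred, target)
    # Squared distance between box centers, normalized by the squared
    # diagonal of the smallest enclosing box.
    rho2 = ((pred[0] + pred[2]) / 2 - (target[0] + target[2]) / 2) ** 2 \
         + ((pred[1] + pred[3]) / 2 - (target[1] + target[3]) / 2) ** 2
    diag2 = (ex2 - ex1) ** 2 + (ey2 - ey1) ** 2
    # Aspect-ratio consistency term v and its trade-off weight alpha.
    pw, ph = pred[2] - pred[0], pred[3] - pred[1]
    tw, th = target[2] - target[0], target[3] - target[1]
    v = (4 / math.pi ** 2) * (math.atan(tw / th) - math.atan(pw / ph)) ** 2
    alpha = v / (1.0 - iou + v + eps)
    return 1.0 - iou + rho2 / diag2 + alpha * v


# Hypothetical boxes: both predictions lie fully inside the target and have
# the same area, but only one of them is centered on the target.
target = (0.0, 0.0, 4.0, 4.0)
pred_centered = (1.0, 1.0, 3.0, 3.0)
pred_corner = (0.0, 0.0, 2.0, 2.0)

print(giou_loss(pred_centered, target), giou_loss(pred_corner, target))
print(ciou_loss(pred_centered, target), ciou_loss(pred_corner, target))
```

In this example the GIoU loss is identical for both predictions, because the enclosing box coincides with the target whenever the prediction is contained in it. The CIoU loss is larger for the off-center prediction, which illustrates the center-point term mentioned in the abstract.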

Table 1. Performance comparison of different target detection models

| Model | Precision/% | mAP(0.5)/% | Recall/% |
| --- | --- | --- | --- |
| Faster R-CNN | 83.4 | 57.9 | 81.7 |
| CenterNet | 87.7 | 61.3 | 85.9 |
| YOLOv3 | 89.5 | 63.2 | 87.2 |
| YOLOv5-SPD | 91.3 | 65.6 | 89.0 |
| Improved model (this study) | 94.1 | 70.3 | 93.0 |

Table 2. Comparison between networks improved by different strategies

| Network model | SE | GIoU | CIoU | Precision/% | mAP(0.5)/% | Recall/% |
| --- | --- | --- | --- | --- | --- | --- |
| YOLOv5-SPD-GIoU | × | √ | × | 91.3 | 65.6 | 89 |
| YOLOv5-SPD-CIoU | × | × | √ | 93.4 | 65.8 | 90 |
| YOLOv5-SPD-SE-GIoU | √ | √ | × | 93.9 | 66.2 | 92 |
| YOLOv5-SPD-SE-CIoU | √ | × | √ | 94.1 | 70.3 | 93 |
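The SE column in Table 2 marks the variants that use channel attention. Assuming SE denotes the standard squeeze-and-excitation block, the sketch below shows its three steps (squeeze, excitation, scale) in plain Python. The function name `se_block`, the omission of biases, and the hand-picked weights are hypothetical; in a trained network the two weight matrices are learned, and this sketch does not show where the authors place the block inside YOLOv5-SPD.

```python
import math


def se_block(feature_map, w1, w2):
    """Apply squeeze-and-excitation to a feature map.

    feature_map: list of C channels, each a list of H rows of W floats.
    w1: (C/r) x C weights of the reduction layer (biases omitted here).
    w2: C x (C/r) weights of the expansion layer (biases omitted here).
    Returns the rescaled feature map and the per-channel scale factors.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]
    # Excitation: fully connected -> ReLU -> fully connected -> sigmoid.
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w1]
    scale = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]
    # Scale: multiply every value in a channel by that channel's weight.
    out = [[[s * v for v in row] for row in ch] for ch, s in zip(feature_map, scale)]
    return out, scale


# Hypothetical 2-channel, 2x2 feature map and hand-picked weights (reduction to 1 unit).
fmap = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[0.5, 0.5], [0.5, 0.5]],
]
w1 = [[1.0, -1.0]]
w2 = [[2.0], [-2.0]]

out, scale = se_block(fmap, w1, w2)
print(scale)
```

With these illustrative weights the first channel receives a larger scale factor than the second, so its features are emphasized in the output. This is the sense in which channel attention strengthens the weights of effective features.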

