Regional attention selection and feature reinforcement for occluded person re-identification


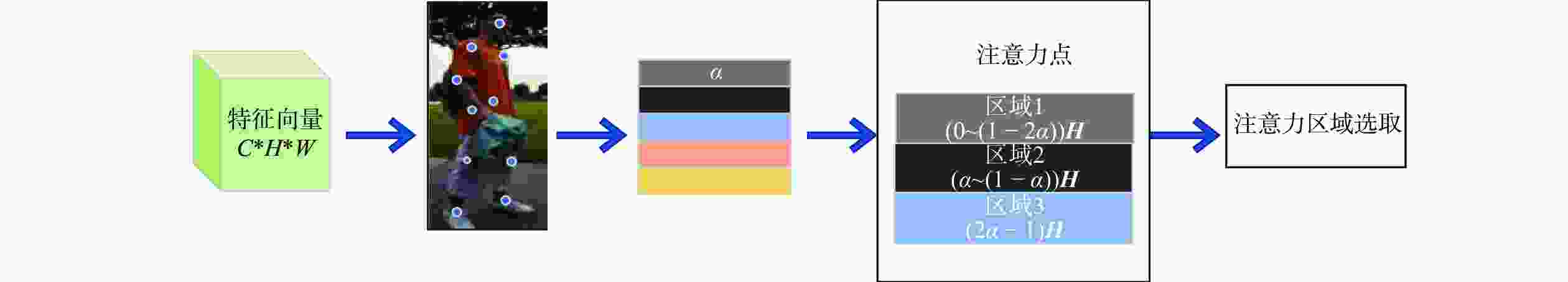

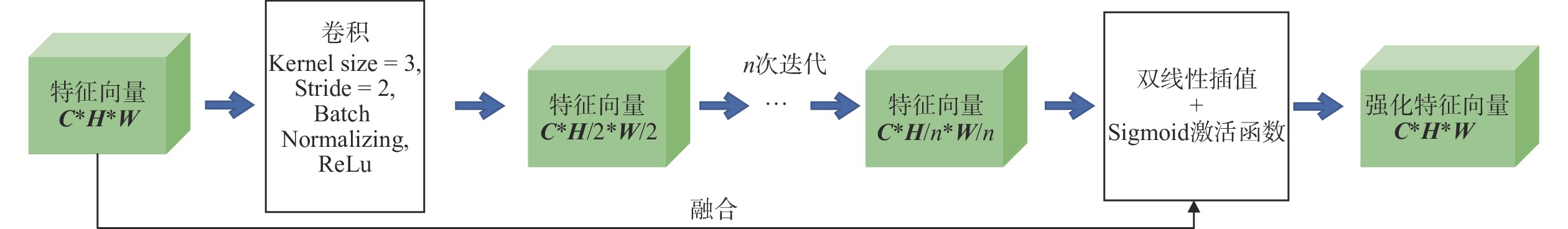

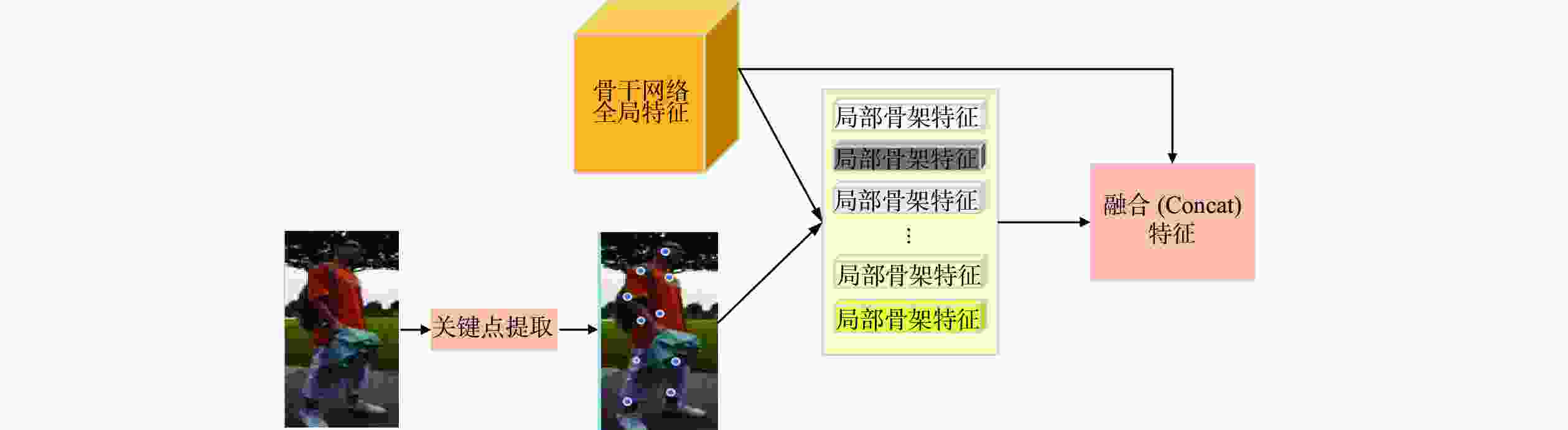

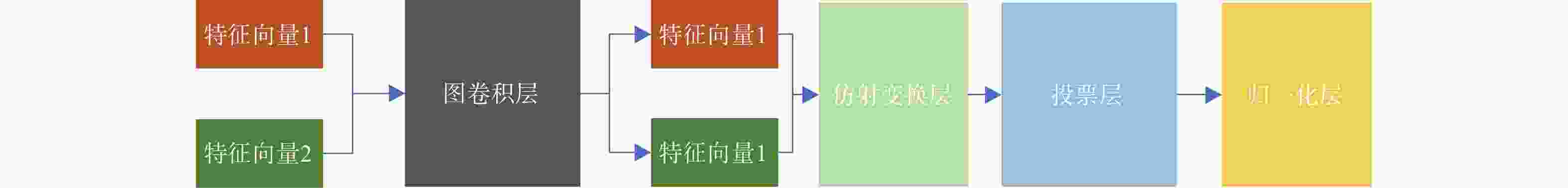

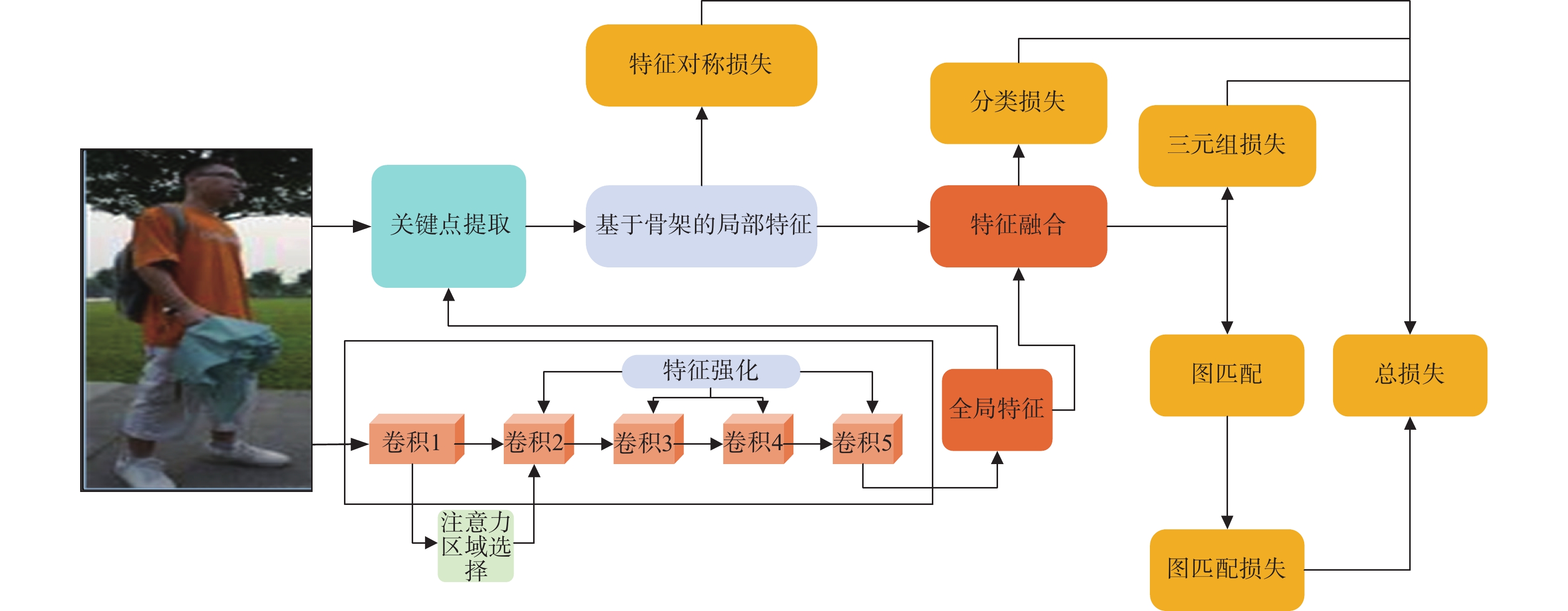

Abstract: In practical applications, the main problem facing occluded person re-identification (ReID) is that pedestrian features are incomplete and noisy during extraction and matching, which demands a model with higher stability. A ReID model was built with deep learning techniques and extended with two modules: regional attention selection and feature reinforcement. The former adaptively selects regions of interest from the original image; the latter distinguishes person features and weights them during matching. Ablation analysis shows that both modules are embeddable and beneficial for the occluded person re-identification task. The model achieves Rank-1 accuracies of 55.9% and 79.1% on the Occluded-Duke and Occluded-ReID datasets, respectively.
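The abstract states only that the feature reinforcement module distinguishes person features and weights them during matching; its exact formulation is not given in this section. The following is a minimal sketch, assuming PCB-style horizontal part features and a hypothetical per-part weight in [0, 1] (for example a visibility score), of how such weighting can keep occluded parts from dominating a query-to-gallery distance. The functions `l2_normalize` and `weighted_part_distance` and the product-of-weights rule are illustrative assumptions, not the authors' RasFr-ReID implementation.

```python
import numpy as np


def l2_normalize(x: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Scale each vector (last axis) to unit length."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def weighted_part_distance(q_parts, q_weights, g_parts, g_weights, eps=1e-12):
    """Hypothetical weighted matching of one query against N gallery images.

    q_parts:   (P, D)    part features of the query
    q_weights: (P,)      per-part weights of the query, e.g. visibility in [0, 1]
    g_parts:   (N, P, D) part features of the gallery images
    g_weights: (N, P)    per-part weights of the gallery images
    Returns (N,) distances in which each part pair counts in proportion to
    the product of its two weights, so parts hidden in either image fade out.
    """
    q = l2_normalize(q_parts)                          # (P, D)
    g = l2_normalize(g_parts)                          # (N, P, D)
    cos_dist = 1.0 - np.einsum("pd,npd->np", q, g)     # (N, P) per-part cosine distance
    w = q_weights[None, :] * g_weights                 # (N, P) joint part weights
    return (w * cos_dist).sum(axis=1) / (w.sum(axis=1) + eps)


# Toy example: gallery 0 is the query with its two lower parts replaced by
# "occluder" noise; gallery 1 is a different, random person.
rng = np.random.default_rng(0)
P, D = 4, 8
query = rng.normal(size=(P, D))
occluded = query.copy()
occluded[2:] = rng.normal(size=(2, D))
other = rng.normal(size=(P, D))
gallery = np.stack([occluded, other])

q_w = np.ones(P)
g_w_uniform = np.ones((2, P))
g_w_visible = np.array([[1.0, 1.0, 0.0, 0.0],   # lower parts of gallery 0 marked invisible
                        [1.0, 1.0, 1.0, 1.0]])

d_uniform = weighted_part_distance(query, q_w, gallery, g_w_uniform)
d_weighted = weighted_part_distance(query, q_w, gallery, g_w_visible)
print(d_uniform, d_weighted)
```

With uniform weights the occluded parts inflate the distance to the true match; once they are down-weighted, only the visible (identical) parts are compared and the true match's distance drops to about zero.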


Key words:

- deep learning

- neural networks

- person re-identification (ReID)

- feature processing


Table 1. Performance comparison of various methods on the Occluded-Duke and Occluded-ReID datasets

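Unit: % ("-" = not reported)

| Method | Occluded-Duke Rank-1 | Occluded-Duke mAP | Occluded-ReID Rank-1 | Occluded-ReID mAP |
|---|---|---|---|---|
| Part-Aligned | 28.8 | 20.2 | - | - |
| PCB | 42.6 | 33.7 | 41.3 | 38.9 |
| Part Bilinear | 36.9 | - | - | - |
| FD-GAN | 40.8 | - | - | - |
| AMC + SWM | - | - | 31.2 | 27.3 |
| DSR | 40.8 | 30.4 | 72.8 | 62.8 |
| SFR | 42.3 | 32 | - | - |
| Ad-Occluded | 44.5 | 32.2 | - | - |
| ViT Base | 59.9 | 52.3 | 81.2 | 76.7 |
| FPR | - | - | 78.3 | 68.0 |
| PGFA | 51.4 | 37.3 | - | - |
| HOReID | 55.1 | 43.8 | 80.3 | 70.2 |
| RasFr-ReID (ours) | 55.9 | 44.1 | 79.1 | 75.1 |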
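Table 1 reports Rank-1 accuracy (the share of queries whose nearest gallery image has the correct identity) and mAP. The sketch below computes both, plus any Rank-k such as the Rank-3 used in Table 2, from a query-by-gallery distance matrix. It follows the common Market-1501/DukeMTMC convention of discarding same-identity, same-camera gallery entries when camera labels are supplied; whether the paper applies exactly this filtering on each dataset is an assumption, and `evaluate_rank_map` is a hypothetical name.

```python
import numpy as np


def evaluate_rank_map(dist, q_ids, g_ids, q_cams=None, g_cams=None, topk=(1, 3)):
    """Rank-k (CMC) accuracy and mAP, both in %, from a query-by-gallery distance matrix.

    dist:           (num_query, num_gallery) array, smaller = more similar
    q_ids, g_ids:   identity labels of the queries / gallery images
    q_cams, g_cams: optional camera labels; if given, gallery images with the
                    same identity AND camera as the query are ignored
    Queries without any valid correct match are skipped.
    """
    hits = {k: 0 for k in topk}
    aps = []
    for i in range(dist.shape[0]):
        order = np.argsort(dist[i])                    # nearest gallery image first
        matches = g_ids[order] == q_ids[i]
        if q_cams is not None and g_cams is not None:
            junk = matches & (g_cams[order] == q_cams[i])
            matches = matches[~junk]
        if not matches.any():
            continue
        first_hit = int(np.argmax(matches))            # 0-based rank of first correct match
        for k in topk:
            hits[k] += int(first_hit < k)
        # Non-interpolated AP: mean of precision@r over every rank r holding a correct match.
        hit_ranks = np.flatnonzero(matches) + 1        # 1-based ranks of correct matches
        aps.append(float(np.mean(np.arange(1, hit_ranks.size + 1) / hit_ranks)))
    if not aps:
        raise ValueError("no query has a valid correct match in the gallery")
    cmc = {k: 100.0 * hits[k] / len(aps) for k in topk}
    return cmc, 100.0 * float(np.mean(aps))


# Toy example: 2 queries, 3 gallery images.
dist = np.array([[0.1, 0.5, 0.3],
                 [0.2, 0.4, 0.9]])
q_ids = np.array([1, 2])
g_ids = np.array([1, 2, 1])
cmc, mAP = evaluate_rank_map(dist, q_ids, g_ids)
print(cmc, mAP)  # prints {1: 50.0, 3: 100.0} 75.0
```

Query 0 retrieves both of its true matches first (AP = 1), while query 1 finds its only match at rank 2 (AP = 0.5), so Rank-1 is 50%, Rank-3 is 100%, and mAP is 75%. This is why a method can trail on Rank-1 yet lead on mAP, as RasFr-ReID does against HOReID on Occluded-ReID.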

Table 2. Effect of the number of feature reinforcement iterations and the attention region selection parameter α on performance

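Conv2 to Conv5 give the number of feature reinforcement iterations at each stage; Rank-1, Rank-3, and mAP are measured on Occluded-ReID.

| α | Conv2 | Conv3 | Conv4 | Conv5 | Rank-1/% | Rank-3/% | mAP/% |
|---|---|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 | 75.6 | 80.0 | 73.5 |
| 0 | 4 | 4 | 2 | 2 | 76.1 | 79.7 | 72.5 |
| 0 | 8 | 4 | 2 | 2 | 77.4 | 80.4 | 74.6 |
| 0 | 8 | 4 | 4 | 2 | 76.7 | 81.1 | 74.8 |
| 0 | 8 | 8 | 4 | 2 | 76.7 | 81.4 | 73.2 |
| 0 | 16 | 8 | 4 | 2 | 76.4 | 80.7 | 73.4 |
| 0.01 | 8 | 4 | 2 | 2 | 77.4 | 81.4 | 74.3 |
| 0.02 | 8 | 4 | 2 | 2 | 79.1 | 85.1 | 75.1 |
| 0.05 | 8 | 4 | 2 | 2 | 78.0 | 82.4 | 75.3 |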
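Table 2 varies α, the attention region selection parameter, but this section does not define how α enters the module. Purely as an illustration, the sketch below assumes α is a threshold on a min-max normalized activation map: feature-grid cells whose attention exceeds α define a bounding box that is cropped from the original image, and α = 0 is treated as "no selection". The energy-based attention map, the threshold rule, and the function `select_region` are hypothetical stand-ins, not the paper's method.

```python
import numpy as np


def select_region(image, feat, alpha):
    """Hypothetical attention-based region selection.

    image: (H, W, C) array, the original image
    feat:  (C', h, w) backbone feature map computed from that image
    alpha: assumed threshold in [0, 1) on the min-max normalized attention map;
           alpha <= 0 returns the image unchanged (no selection)
    """
    if alpha <= 0:
        return image
    att = (feat ** 2).mean(axis=0)                       # (h, w) activation energy as attention
    att = (att - att.min()) / (att.max() - att.min() + 1e-12)
    mask = att > alpha                                   # cells considered part of the person
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:                                   # flat attention: keep the whole image
        return image
    H, W = image.shape[:2]
    h, w = att.shape
    # Map the bounding box of selected cells back to pixels (each cell spans H/h x W/w).
    top, bottom = rows[0] * H // h, (rows[-1] + 1) * H // h
    left, right = cols[0] * W // w, (cols[-1] + 1) * W // w
    return image[top:bottom, left:right]


# Toy example: a 256x128 image whose 16x8 feature map is active only on one block.
image = np.zeros((256, 128, 3))
feat = np.zeros((4, 16, 8))
feat[:, 4:12, 2:6] = 1.0                                 # hypothetical person region
crop = select_region(image, feat, alpha=0.02)
print(crop.shape)                                        # prints (128, 64, 3)
print(select_region(image, feat, alpha=0.0).shape)       # prints (256, 128, 3)
```

Under this reading, a small positive α trims only the least-attended margins, while a larger α shrinks the crop more aggressively and can start cutting into the person; that trade-off would be consistent with the rise from α = 0.01 to 0.02 and the Rank-1 drop at 0.05, though the table alone cannot confirm the mechanism.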

