An accuracy improvement method for automatic fiber placement defect recognition based on attention data enhancement


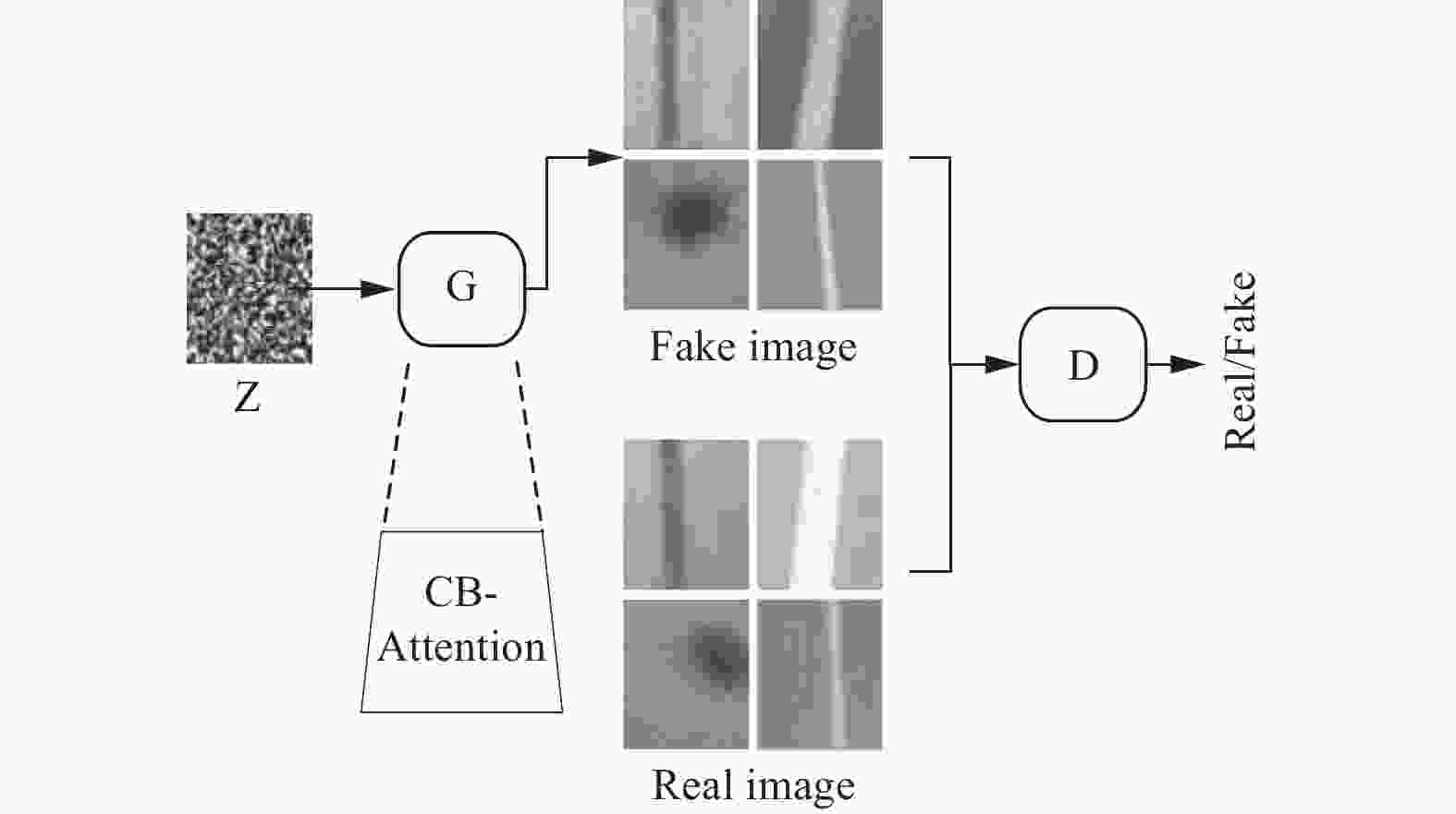

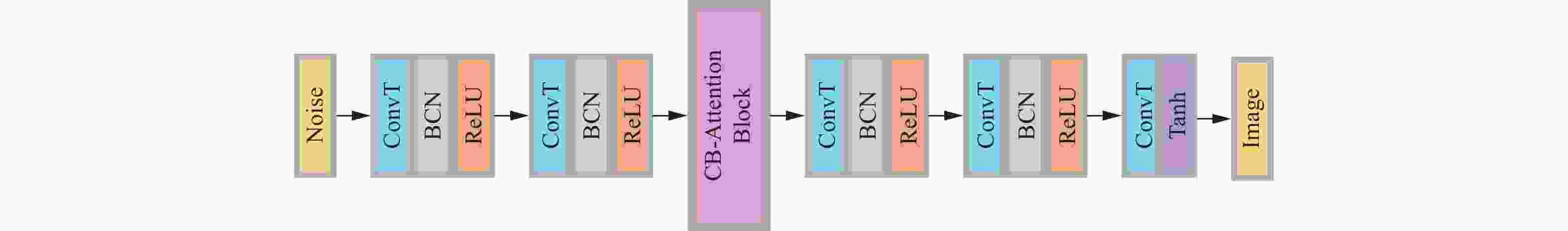

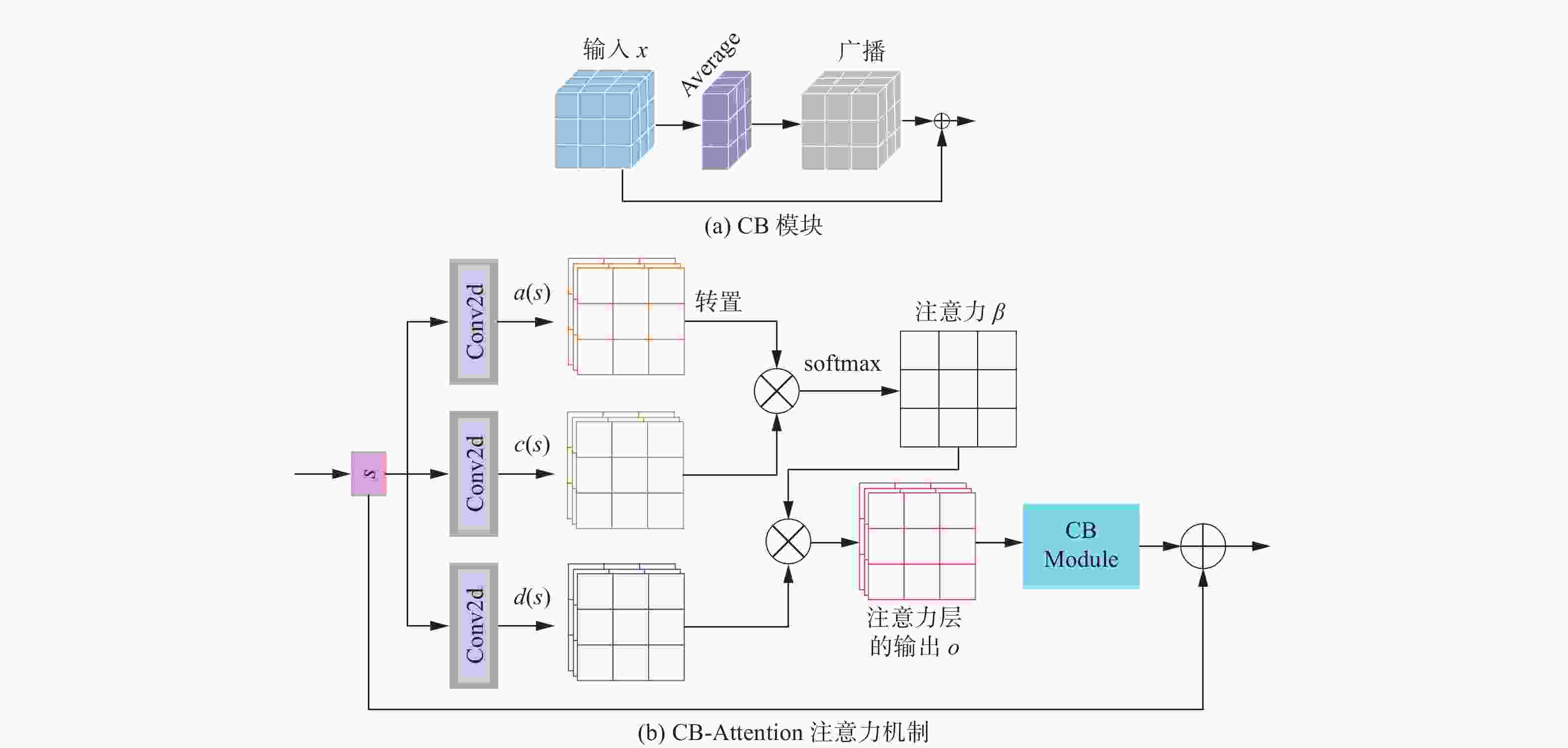

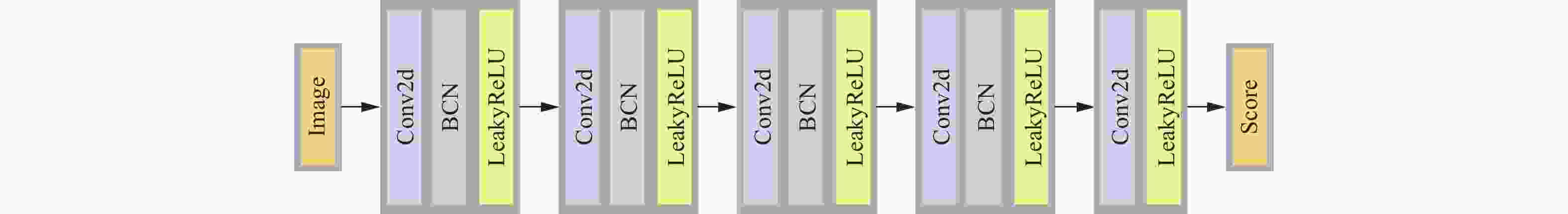

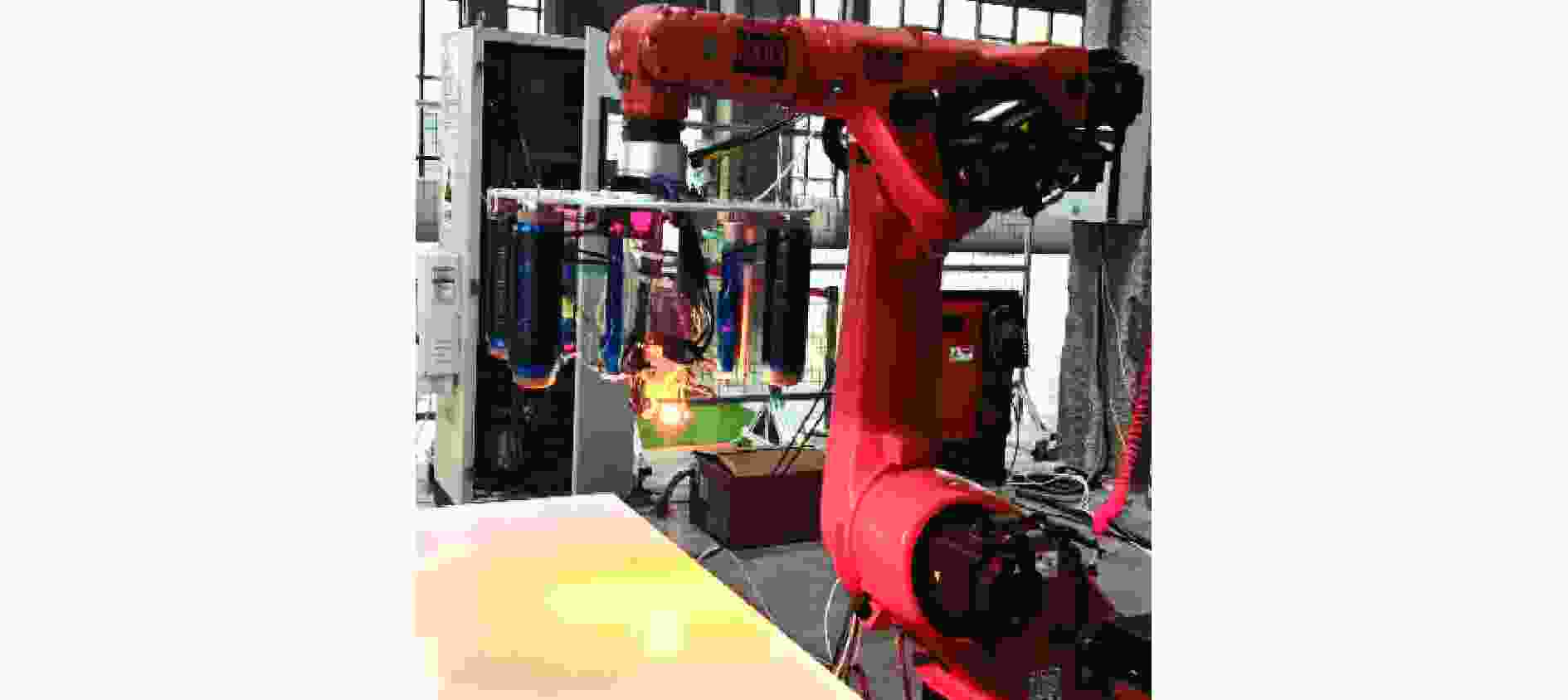

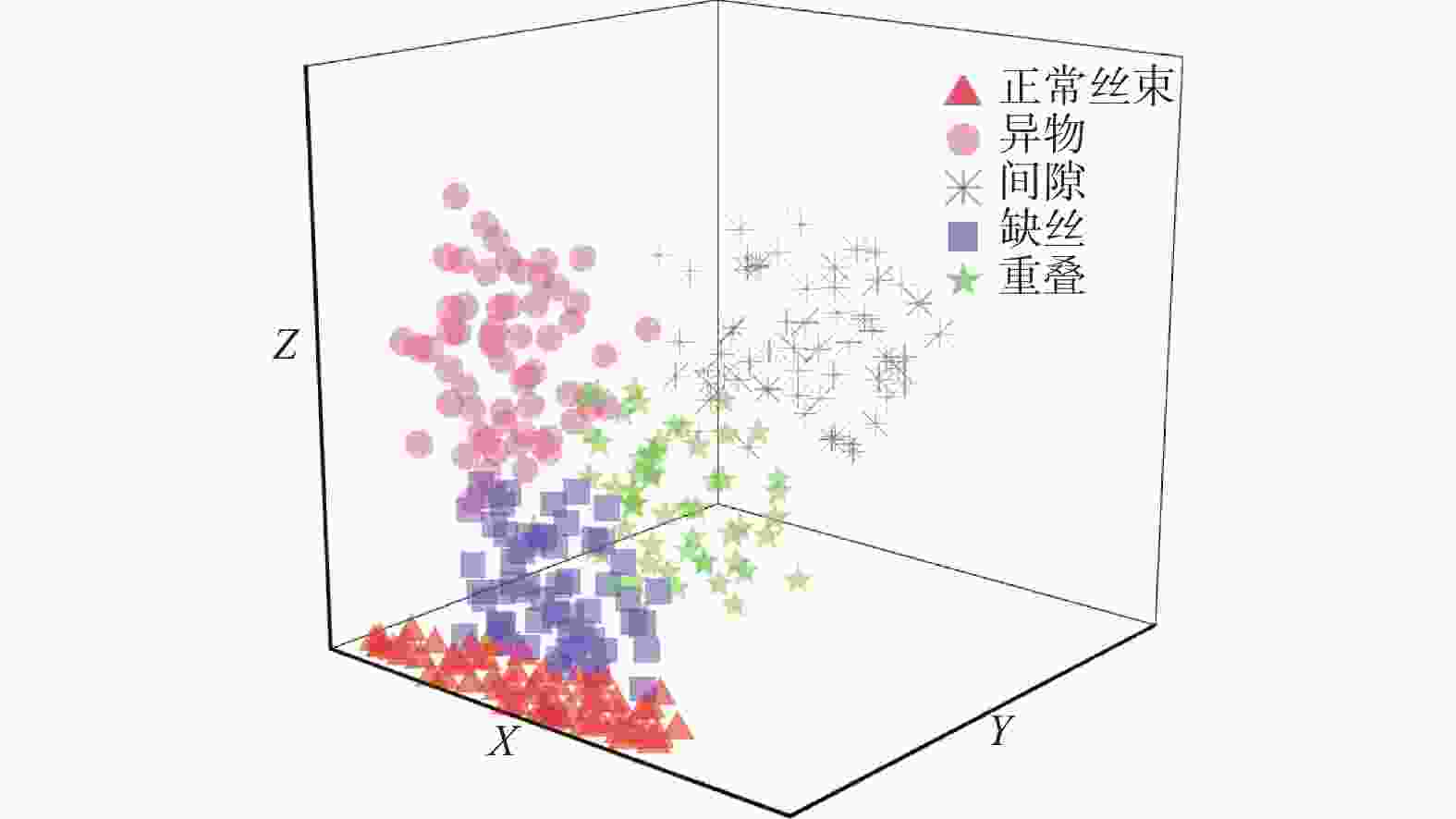

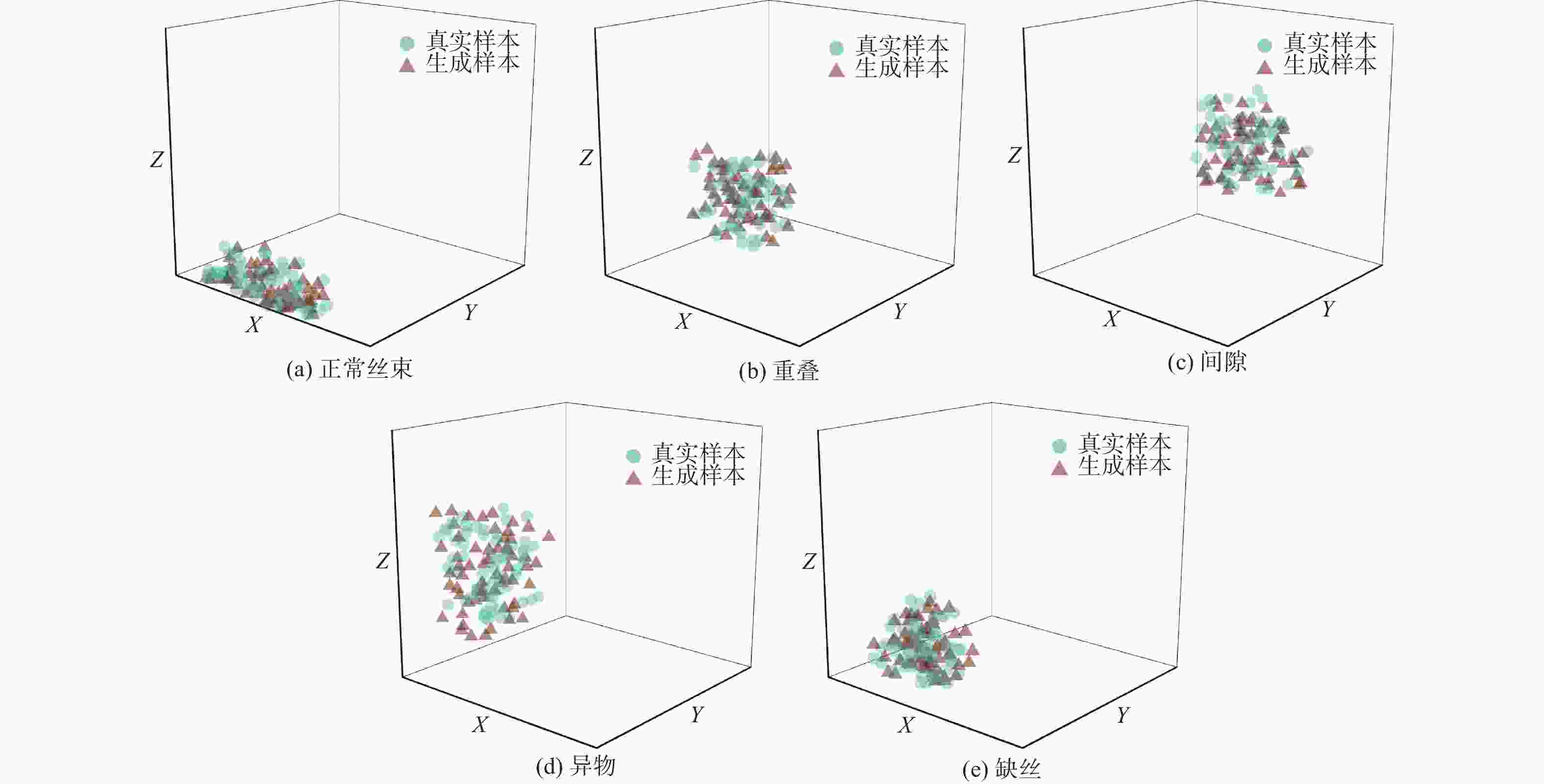

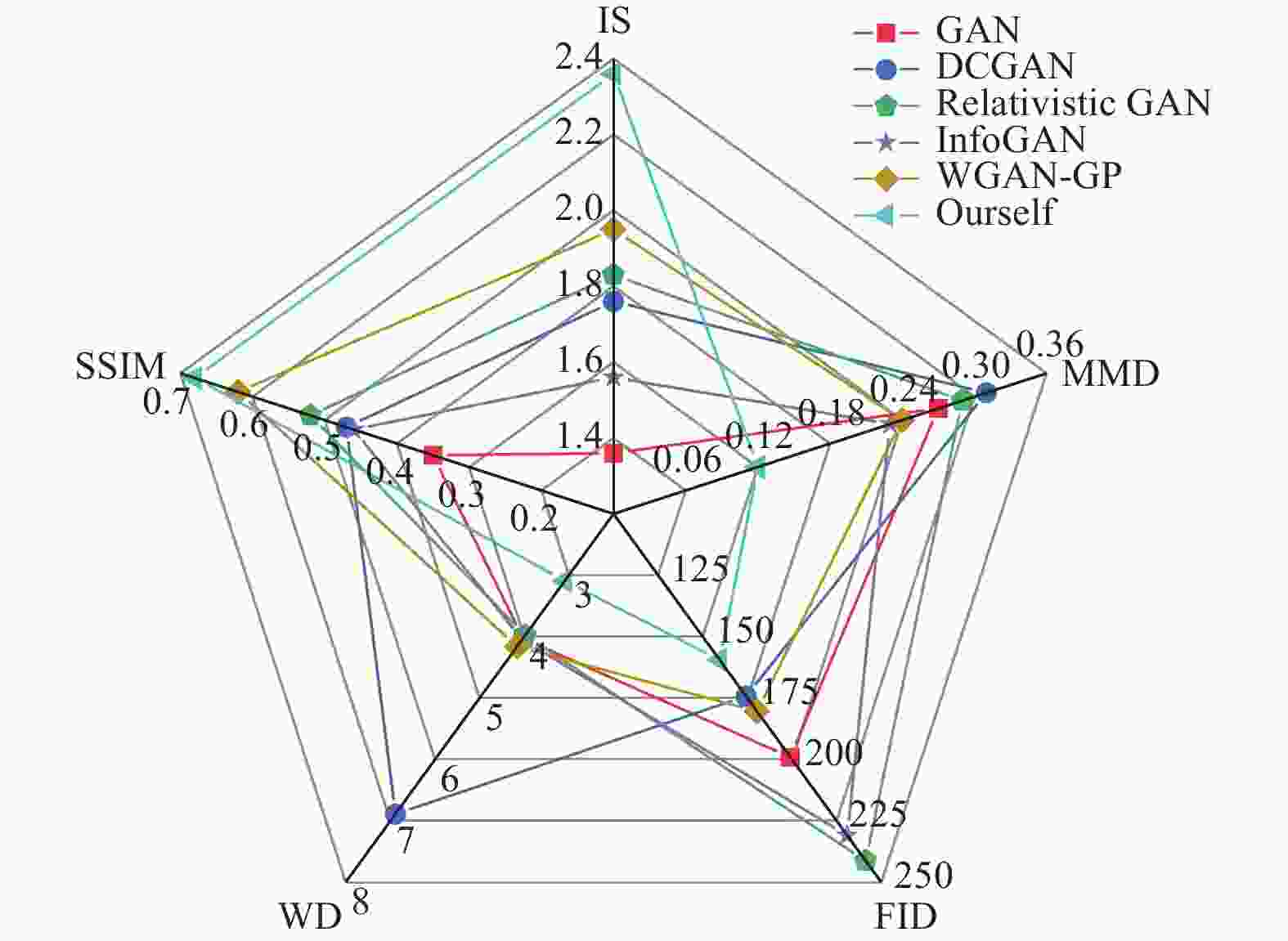

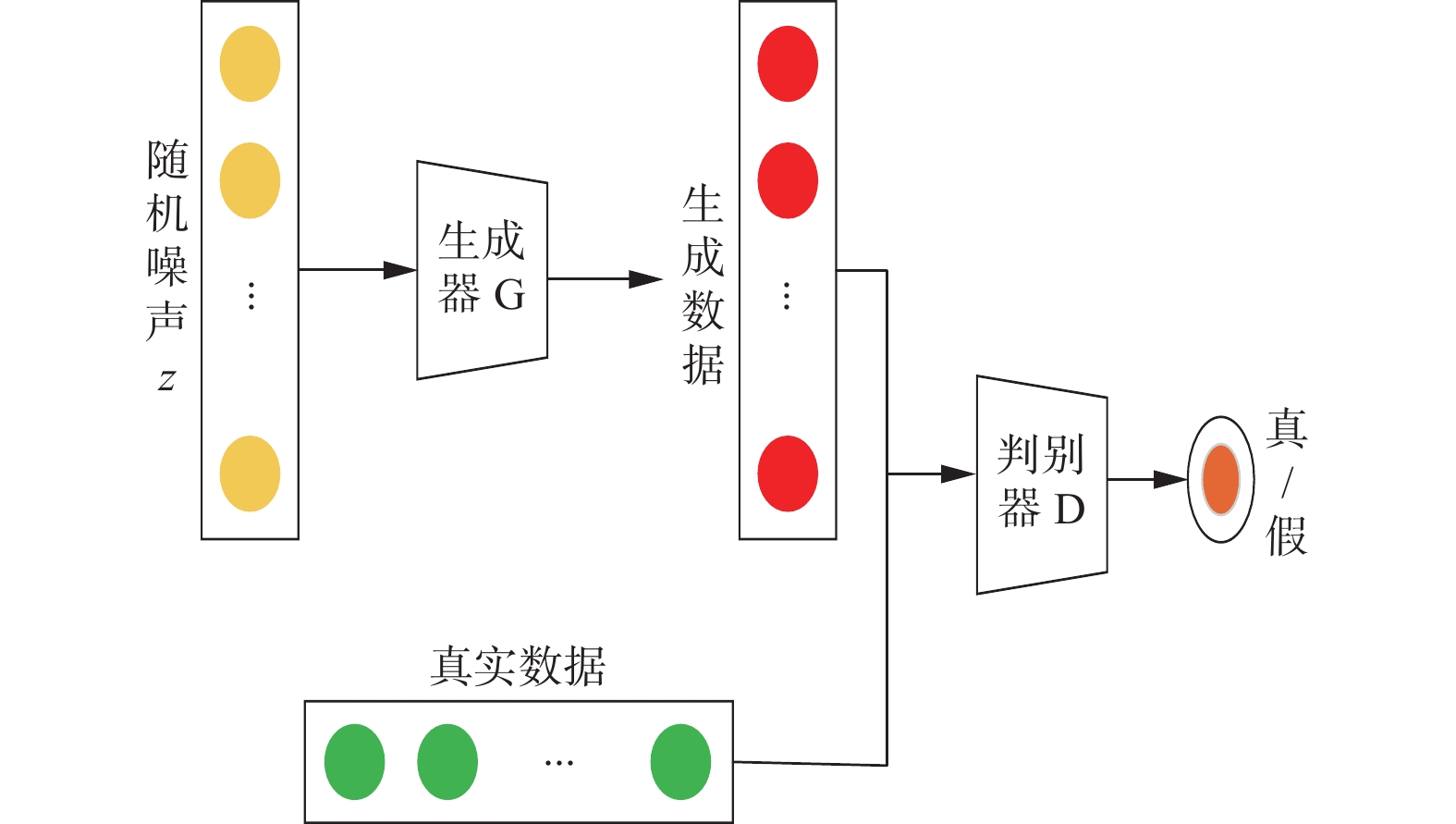

Abstract: Infrared defect samples from the automated fiber placement (AFP) process are scarce and difficult to acquire, which leads to low accuracy in defect classification models and makes defects hard to identify reliably. To address this issue, a data augmentation method based on an attention-mechanism Wasserstein generative adversarial network (WGAN) is proposed. A CB-Attention module is introduced into the generator to strengthen its ability to capture image features, enlarge its receptive field, and improve the quality of the generated images without adding extra parameters. Batch channel normalization is used to adaptively combine information from the channel and batch dimensions, which increases the training speed and generalization ability of the model. Experimental results show that the samples generated by the proposed attention-based augmentation method are diverse and of high quality. Adding them to the small-sample AFP defect dataset effectively improves defect recognition accuracy, which validates the effectiveness of the proposed algorithm and provides a data foundation for AFP defect classification algorithms.
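The abstract describes batch channel normalization only as an adaptive combination of batch-dimension and channel-dimension information. A minimal sketch of that idea follows, under loud assumptions: features are reduced to a plain (batch, channel) matrix with no spatial dimensions, and the names `batch_channel_norm`, `iota`, `gamma` and `beta` are hypothetical. In a real network the mixing weight would be learned rather than fixed, and the authors' exact formulation may differ.

```python
import math


def _normalize(values, eps=1e-5):
    """Shift values to zero mean and scale them to (roughly) unit variance."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + eps) for v in values]


def batch_channel_norm(x, iota=0.5, gamma=1.0, beta=0.0):
    """Blend batch-wise and channel-wise normalization of a (batch, channel) matrix.

    x is a list of rows, one row per sample. iota in [0, 1] is a hypothetical
    mixing weight (assumed learnable in a real model, fixed here).
    """
    n, c = len(x), len(x[0])
    # Batch statistics: normalize each channel across all samples.
    by_batch = [_normalize([x[i][j] for i in range(n)]) for j in range(c)]
    # Channel statistics: normalize each sample across its channels.
    by_channel = [_normalize(x[i]) for i in range(n)]
    return [
        [
            gamma * (iota * by_batch[j][i] + (1.0 - iota) * by_channel[i][j]) + beta
            for j in range(c)
        ]
        for i in range(n)
    ]


# Made-up feature values, for illustration only.
features = [[1.0, 2.0, 3.0], [2.0, 4.0, 9.0], [0.0, 1.0, 3.0]]
out = batch_channel_norm(features, iota=0.7)
```

Setting `iota=1.0` reduces the sketch to per-channel batch normalization, and `iota=0.0` reduces it to per-sample normalization across channels; intermediate values mix the two.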


Table 1. Model training process strategy

Model training pseudocode. In each training iteration:

For k steps do:

Train the discriminator network $ D_{\alpha}(x) $

Draw m samples $ \left\{z^i\right\}_{i=1}^m $ from the noise distribution $ P_z(z) $

Draw m samples $ \left\{x^i\right\}_{i=1}^m $ from the real data distribution $ P_{\mathrm{data}}(x) $

Evaluate the real images $ \longrightarrow \operatorname{loss}_{D(\text{real})} $

Evaluate the generated images $ \longrightarrow \operatorname{loss}_{D(\text{fake})} $

Sample the vector $ \left\{\lambda^i\right\}_{i=1}^m $

$ \lambda^i x^i+\left(1-\lambda^i\right) G\left(z^i\right) \longrightarrow \bar{x}^i $

Update the generator weights $ \omega_G $ by gradient descent

$ \operatorname{Adam}\left(\nabla_{\omega_G}\left(\dfrac{1}{m}\displaystyle \sum_{i=1}^m D\left(G\left(z^i\right)\right)\right), \omega_G, \mu, \rho_1, \rho_2\right) \longrightarrow \omega_G $

Update the discriminator weights $ \omega_D $ by gradient descent

$ \operatorname{Adam}\left(\nabla_{\omega_D}\left(\dfrac{1}{m}\displaystyle \sum_{i=1}^m \left[D\left(x^i\right)-D\left(G\left(z^i\right)\right)+k\left\|\nabla_{\bar{x}^i} D\left(\bar{x}^i\right)\right\|^p\right]\right), \omega_D, \mu, \rho_1, \rho_2\right) \longrightarrow \omega_D $

End for
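The two loss expressions in Table 1 can be evaluated numerically with a toy model. The following is a minimal sketch, not the authors' implementation: the scalar critic `tanh(w * x)`, the linear generator `a * z + b`, the uniform sampling of the interpolation weights, and the values `k=2.0` and `p=6.0` are all placeholder assumptions chosen so that the input gradient can be written analytically without a deep learning framework.

```python
import math
import random


def critic(x, w):
    """Toy scalar critic D(x) = tanh(w * x); a stand-in for the real network."""
    return math.tanh(w * x)


def critic_grad_x(x, w):
    """Analytic derivative of the toy critic with respect to its input x."""
    return w * (1.0 - math.tanh(w * x) ** 2)


def generator(z, a, b):
    """Toy generator G(z) = a * z + b; a stand-in for the real network."""
    return a * z + b


def critic_loss(real, noise, w, a, b, k=2.0, p=6.0, rng=random):
    """Batch mean of D(x) - D(G(z)) + k * |dD/dx at x_bar| ** p."""
    total = 0.0
    for x, z in zip(real, noise):
        fake = generator(z, a, b)
        lam = rng.random()                    # assumed: weight drawn uniformly from [0, 1)
        x_bar = lam * x + (1.0 - lam) * fake  # interpolated sample
        penalty = k * abs(critic_grad_x(x_bar, w)) ** p
        total += critic(x, w) - critic(fake, w) + penalty
    return total / len(real)


def generator_loss(noise, w, a, b):
    """Batch mean of D(G(z)), the quantity the generator update descends."""
    return sum(critic(generator(z, a, b), w) for z in noise) / len(noise)


rng = random.Random(0)
real = [rng.gauss(2.0, 0.5) for _ in range(8)]   # made-up "real" samples
noise = [rng.gauss(0.0, 1.0) for _ in range(8)]  # latent noise
d_loss = critic_loss(real, noise, w=0.3, a=1.0, b=0.0, rng=rng)
g_loss = generator_loss(noise, w=0.3, a=1.0, b=0.0)
```

In a full training loop these two scalars would be differentiated with respect to the discriminator and generator weights and passed to an Adam optimizer, as the table indicates; the sketch stops at computing the losses.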

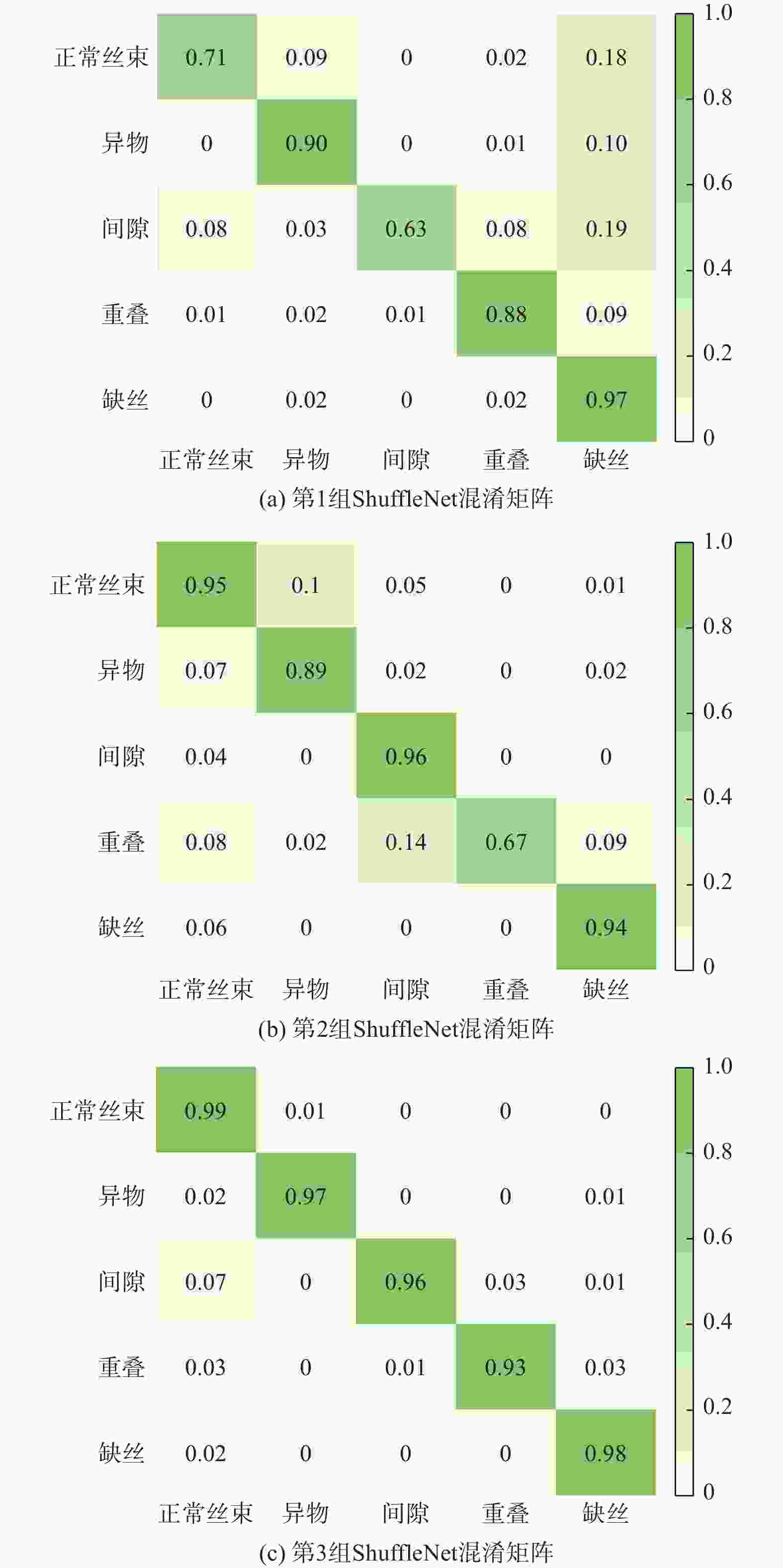

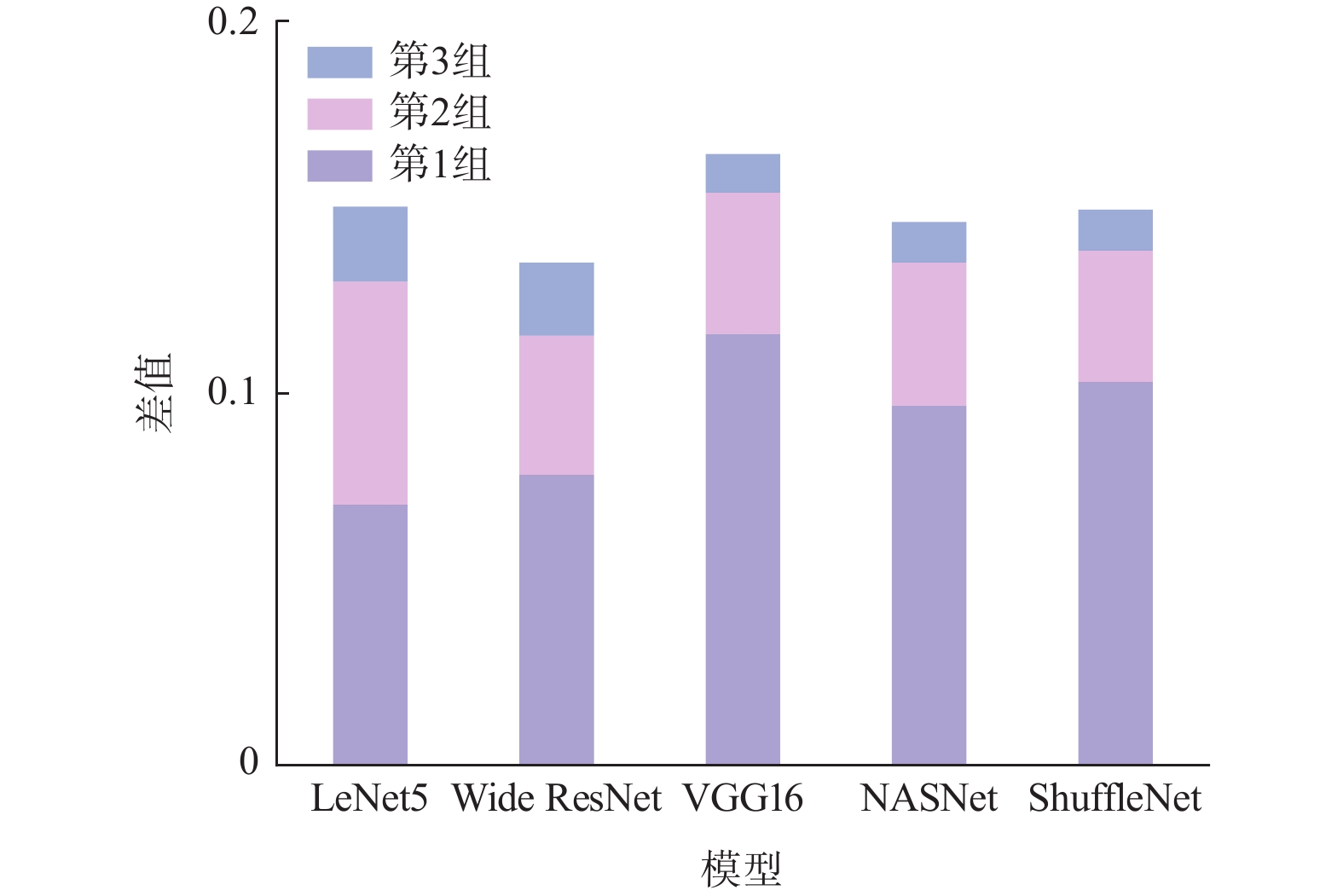

Table 2. Classification results of three experimental groups

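| Model | Group 1 R | Group 1 P | Group 1 F1 | Group 2 R | Group 2 P | Group 2 F1 | Group 3 R | Group 3 P | Group 3 F1 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| LeNet5 | 0.79 | 0.72 | 0.76 | 0.82 | 0.88 | 0.85 | 0.89 | 0.92 | 0.91 |
| Wide ResNet | 0.79 | 0.87 | 0.82 | 0.86 | 0.90 | 0.88 | 0.91 | 0.93 | 0.92 |
| VGG16 | 0.85 | 0.75 | 0.78 | 0.88 | 0.92 | 0.90 | 0.95 | 0.96 | 0.95 |
| NASNet | 0.73 | 0.83 | 0.77 | 0.85 | 0.89 | 0.87 | 0.95 | 0.96 | 0.96 |
| ShuffleNet | 0.78 | 0.88 | 0.83 | 0.85 | 0.89 | 0.87 | 0.93 | 0.95 | 0.94 |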
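Table 2 reports recall (R), precision (P) and F1 for each model, but the page does not say how the scores are averaged over defect classes. The sketch below assumes macro averaging: each score is computed per class and then averaged. This is only one possible reading. It would be consistent with rows where F1 is not the harmonic mean of the listed R and P; for example, VGG16 in group 1 lists R = 0.85, P = 0.75 and F1 = 0.78, whereas the harmonic mean of 0.85 and 0.75 is about 0.80. The function name and the confusion-matrix counts are made up for illustration.

```python
def macro_scores(confusion):
    """Return macro-averaged (recall, precision, F1) from a square confusion matrix.

    confusion[i][j] counts samples whose true class is i and predicted class is j.
    """
    n = len(confusion)
    recalls, precisions, f1s = [], [], []
    for c in range(n):
        tp = confusion[c][c]
        actual = sum(confusion[c])                           # samples truly in class c
        predicted = sum(confusion[r][c] for r in range(n))   # samples predicted as class c
        recall = tp / actual if actual else 0.0
        precision = tp / predicted if predicted else 0.0
        denom = precision + recall
        f1 = 2.0 * precision * recall / denom if denom else 0.0
        recalls.append(recall)
        precisions.append(precision)
        f1s.append(f1)
    return sum(recalls) / n, sum(precisions) / n, sum(f1s) / n


# Made-up counts for three hypothetical defect classes, for illustration only.
example = [
    [8, 2, 0],
    [1, 6, 3],
    [0, 1, 9],
]
recall, precision, f1 = macro_scores(example)
harmonic_of_macros = 2.0 * precision * recall / (precision + recall)
```

On these counts the macro F1 differs slightly from `harmonic_of_macros`, which shows why a reported F1 need not equal the harmonic mean of the reported precision and recall.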

