Edible oil drums date detection based on boundary learning


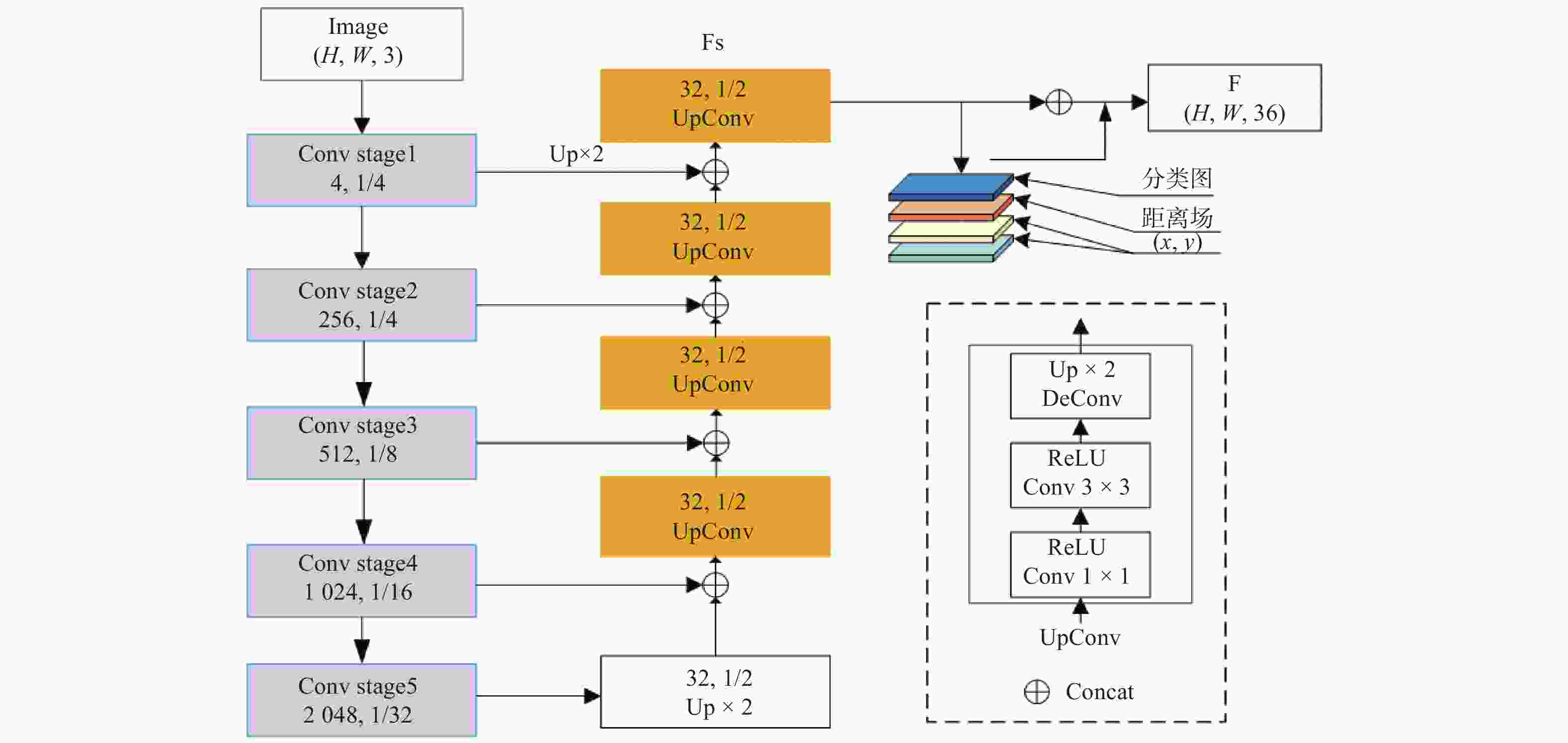

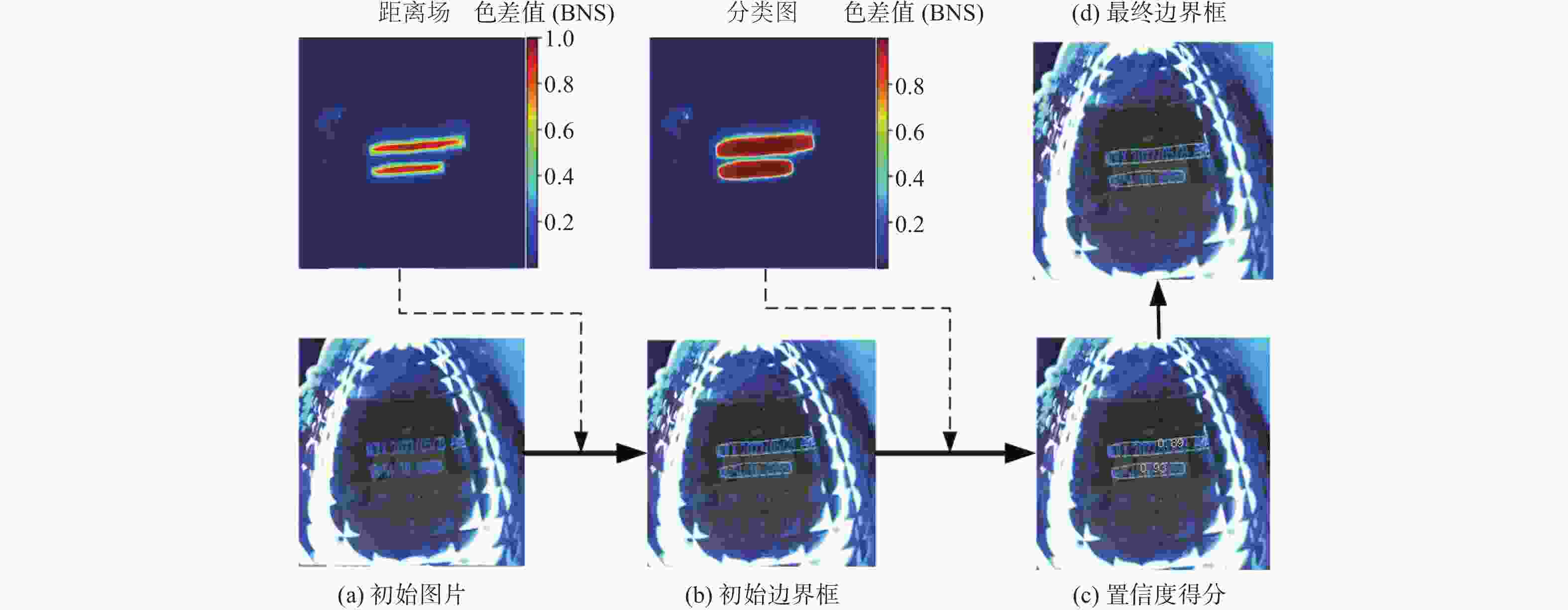

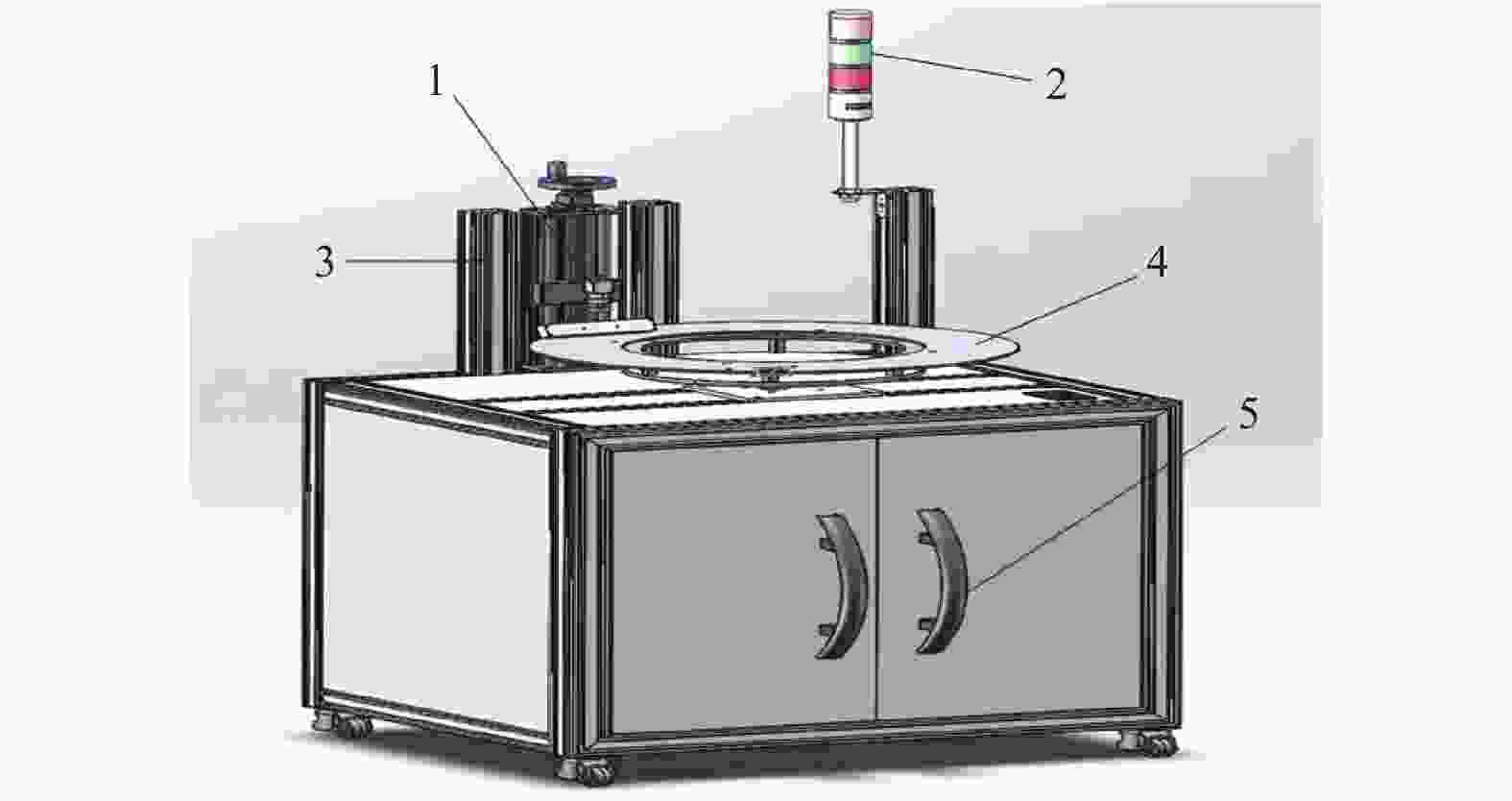

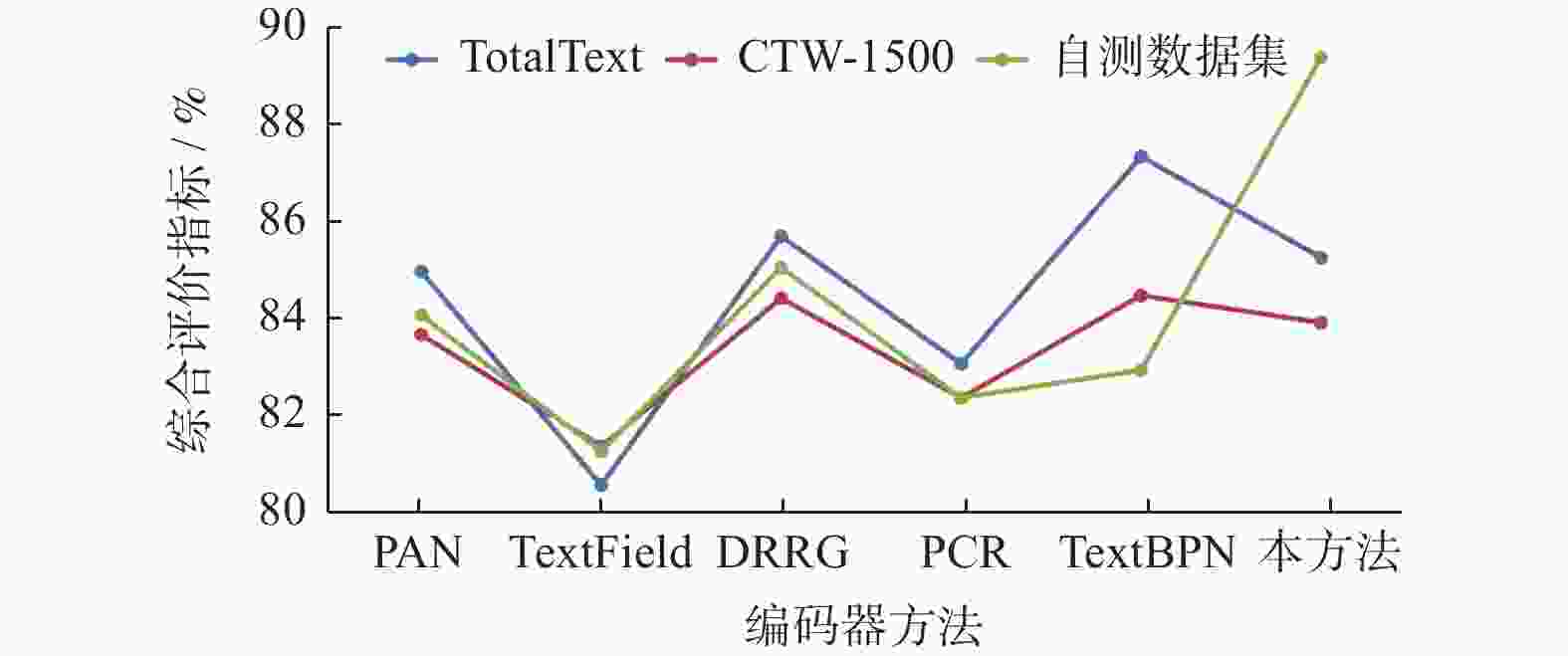

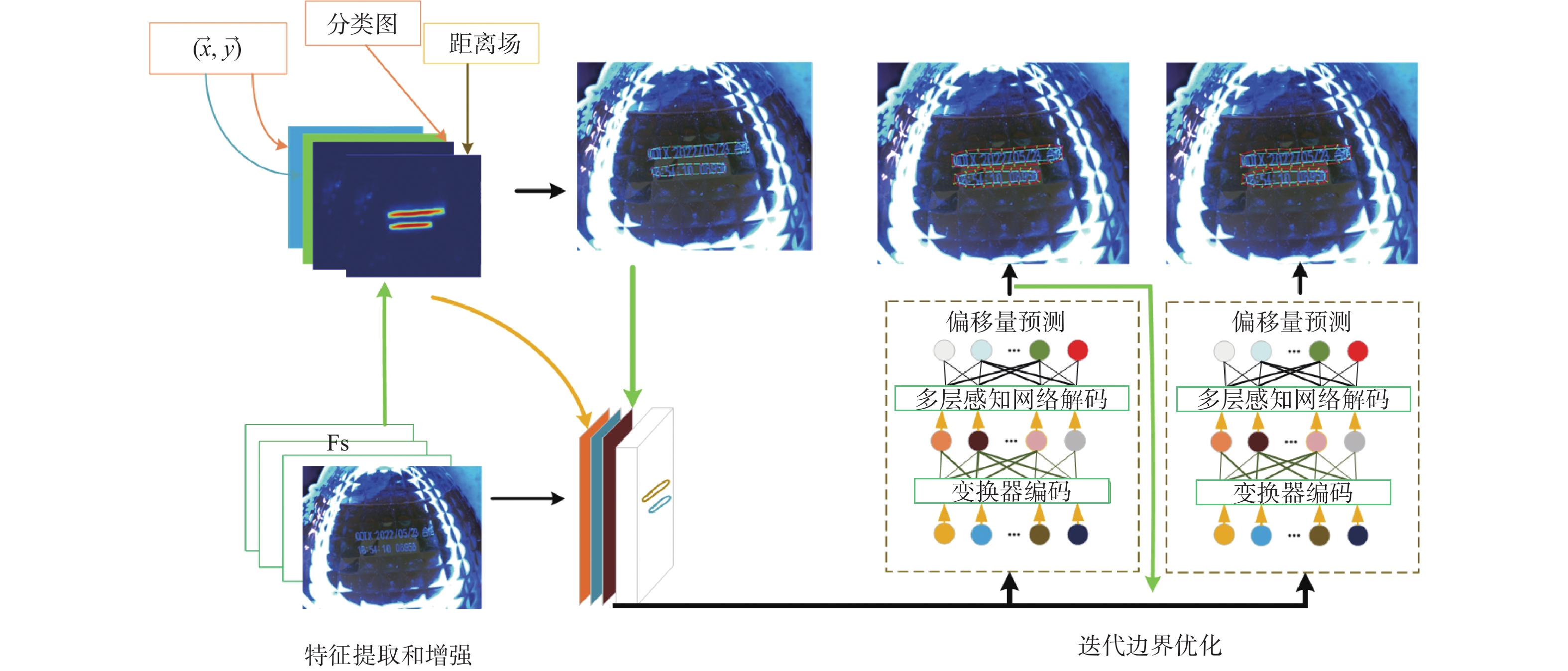

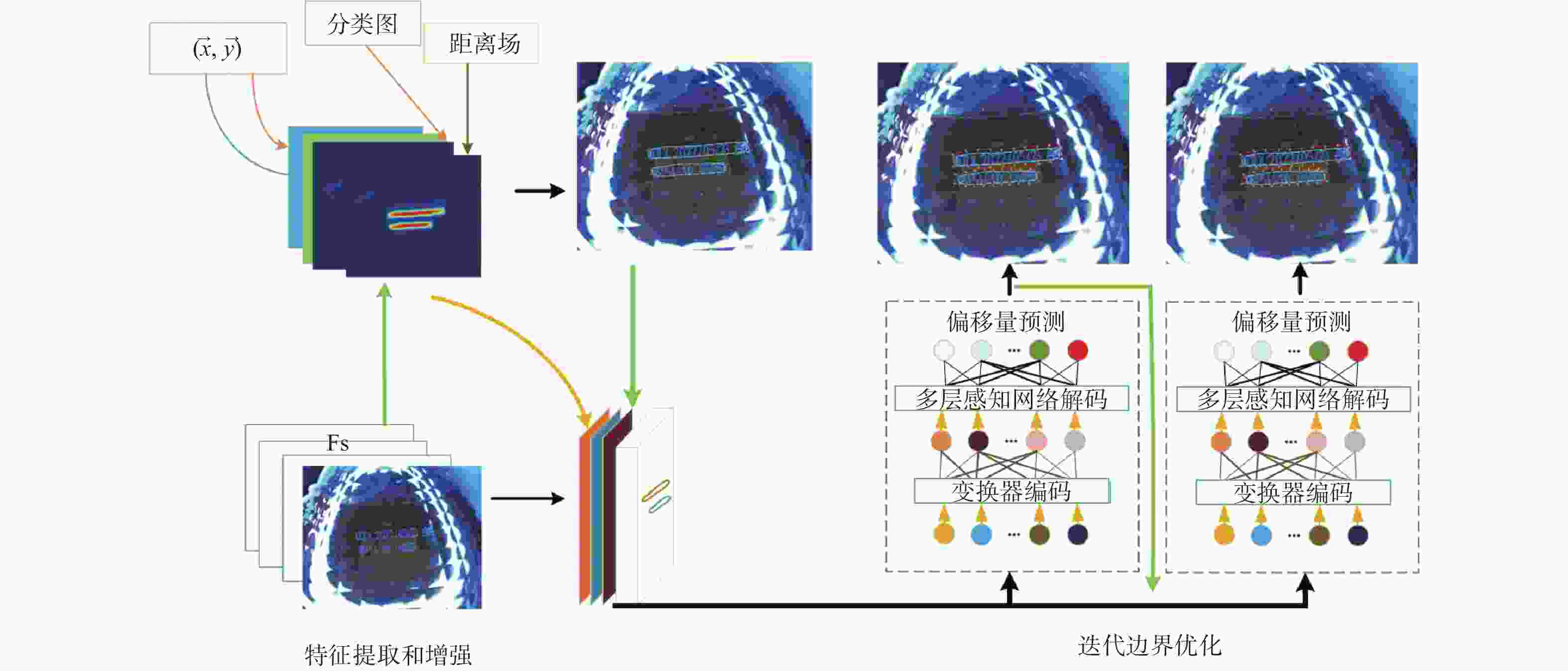

Abstract: In industrial production environments, text is often irregularly shaped, highly reflective, or blurred, and existing text detection methods suffer from inaccurate boundary localization and are limited to rectangular detection boxes. Based on boundary learning, this paper proposes a unified coarse-to-fine detection framework consisting of a feature extraction backbone network, a boundary proposal module, and an iteratively optimized boundary transformer module. The backbone uses a ResNet to extract features from the image. The boundary proposal module, composed of multiple dilated convolution layers, generates coarse boundaries. The boundary transformer module adopts an encoder-decoder structure and progressively refines the coarse boundaries through iterative deformation, guided by the classification map, distance field, and direction field. In experiments on a self-collected dataset, the model achieves a precision of 91.56%, a recall of 87.38%, and an F-measure of 89.41%, which verifies the efficiency and advantages of the algorithm for date detection on edible oil drums.
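The iterative deformation described in the abstract can be illustrated with a toy sketch. This is not the paper's learned boundary transformer: as an assumption for illustration, the predicted distance and direction fields are replaced by analytic ones for a circular target, and the names `refine_boundary`, `step`, and `coarse_box` are hypothetical.

```python
import math


def refine_boundary(points, center, radius, iterations=3, step=0.5):
    """Move boundary control points toward a circular target, pass by pass.

    Toy stand-in for learned refinement (assumption): the distance field is
    the signed distance to the circle, and the direction field is the unit
    radial vector at each point.
    """
    cx, cy = center
    errors = []
    for _ in range(iterations):
        moved = []
        for x, y in points:
            dx, dy = x - cx, y - cy
            r = math.hypot(dx, dy)
            if r == 0.0:
                # Direction is undefined at the center; leave the point alone.
                moved.append((x, y))
                continue
            distance = r - radius      # distance field value at (x, y)
            ux, uy = dx / r, dy / r    # direction field value at (x, y)
            moved.append((x - step * distance * ux, y - step * distance * uy))
        points = moved
        # Mean absolute distance of the control points from the target.
        errors.append(
            sum(abs(math.hypot(x - cx, y - cy) - radius) for x, y in points)
            / len(points)
        )
    return points, errors


# A coarse rectangular proposal around a circular target of radius 1.
coarse_box = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
refined, errors = refine_boundary(coarse_box, center=(2.0, 2.0), radius=1.0)
print(errors)
```

With `step=0.5`, each pass halves the remaining distance to the target, so later passes bring smaller improvements while still costing time.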


Key words:

- date detection
- arbitrary shapes
- boundary learning
- machine vision


Table 1. Performance of different encoders on the self-collected dataset

| Method | Recall/% | Precision/% | F-measure/% | FPS |
| --- | --- | --- | --- | --- |
| FC | 78.32 | 85.03 | 81.54 | 11.1 |
| RNN | 81.26 | 86.00 | 83.56 | 12.2 |
| CNN | 80.35 | 84.88 | 82.55 | 10.9 |
| GCN | 80.31 | 86.12 | 83.12 | 11.9 |
| Proposed method | 81.12 | 88.08 | 84.46 | 14.7 |

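Table 2. Results for different numbers of iterations on the self-collected dataset

| Method | Iteration 1 F-measure/% | Iteration 1 FPS/(frames·s⁻¹) | Iteration 2 F-measure/% | Iteration 2 FPS/(frames·s⁻¹) | Iteration 3 F-measure/% | Iteration 3 FPS/(frames·s⁻¹) |
| --- | --- | --- | --- | --- | --- | --- |
| Adaptive deformation module | 82.24 | 13.7 | 83.33 | 12.8 | 83.97 | 12.1 |
| RNN | 82.16 | 14.0 | 83.36 | 12.9 | 83.56 | 12.2 |
| CNN | 82.12 | 13.1 | 82.35 | 11.2 | 82.55 | 10.9 |
| Proposed method | 82.72 | 16.0 | 83.63 | 15.3 | 84.20 | 14.7 |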

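Table 3. Boundary energy loss experiments on the self-collected dataset

| Method | Direction field | Boundary energy loss | Recall/% | Precision/% | F-measure/% |
| --- | --- | --- | --- | --- | --- |
| Boundary transformer | × | × | 82.36 | 89.56 | 85.89 |
| Boundary transformer | √ | × | 83.86 | 90.81 | 87.28 |
| Boundary transformer | × | √ | 84.57 | 89.80 | 87.13 |
| Boundary transformer | √ | √ | 85.25 | 89.84 | 87.49 |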

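Table 4. Comparison of methods on the self-collected dataset

| Metric | PAN | TextField | DRRG | PCR | TextBPN | Proposed method |
| --- | --- | --- | --- | --- | --- | --- |
| Recall/% | 83.80 | 75.9 | 82.30 | 77.80 | 80.68 | 87.38 |
| Precision/% | 84.40 | 87.40 | 88.05 | 87.60 | 85.40 | 91.56 |
| FPS | 30.2 | 5.2 | — | — | 12.7 | 37.8 |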
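Table 4 lists recall and precision but no F-measure row. Assuming the F-measure here is the standard harmonic mean of precision and recall (the source does not state its formula), a minimal sketch derives it from the tabulated values. The function name `f_measure` and the dictionary `table4` are hypothetical, and because the inputs are already rounded, the results can differ from published figures in the last digit.

```python
def f_measure(precision, recall):
    """Harmonic mean of precision and recall (both in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision/%, recall/%) pairs copied from Table 4.
table4 = {
    "PAN": (84.40, 83.80),
    "TextField": (87.40, 75.9),
    "DRRG": (88.05, 82.30),
    "PCR": (87.60, 77.80),
    "TextBPN": (85.40, 80.68),
    "Proposed method": (91.56, 87.38),
}

for method, (precision, recall) in table4.items():
    print(f"{method}: F-measure = {f_measure(precision, recall):.2f}%")
```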

